It seems that if you label something “fake news” and add some pretty charts, a certain class of people will share it around like others share pictures of big-breasted women or stories accusing Hillary of murder.

Consider this study published at CJR, which purports to be the “calculated look at fake news’s reach” that has been “missing from the conversation” (suggesting its author may be unfamiliar with this Stanford study or even the latest BuzzFeed poll).

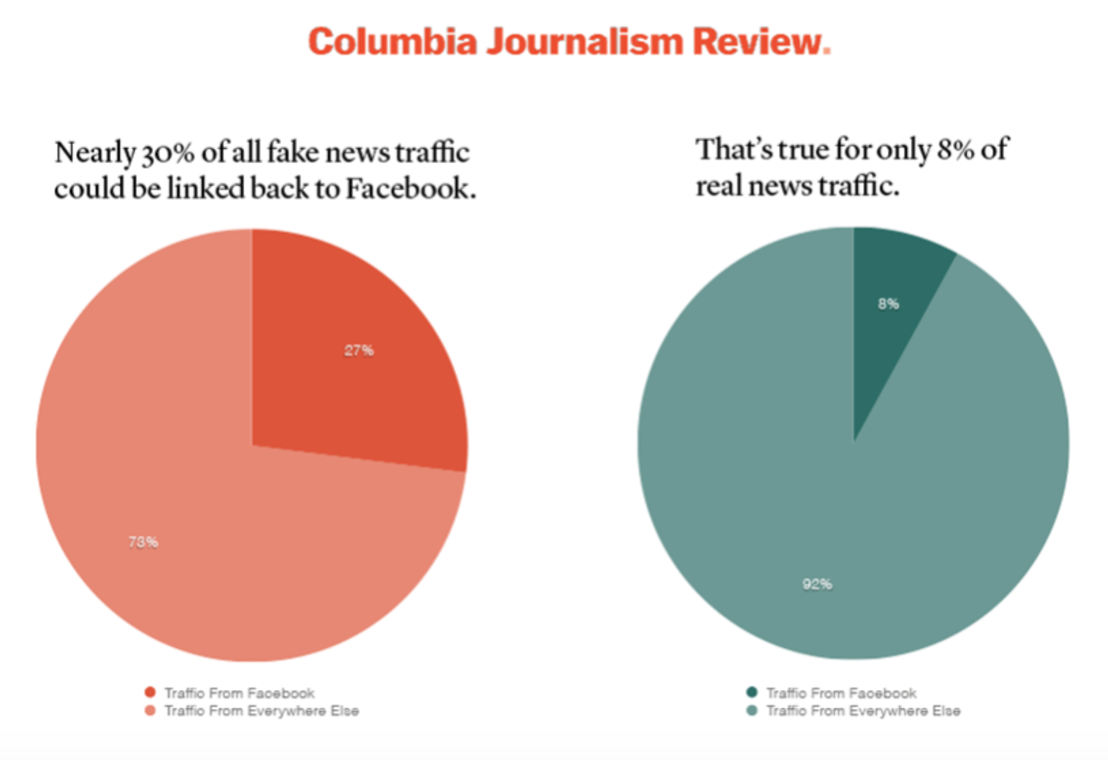

It has pretty pictures everyone is passing around on Twitter (the two charts look sort of like boobies, don’t you think?).

Wowee, that must be significant, huh? That nearly 30% of what it calls “fake news” traffic comes from Facebook, but only 8% of “real news” traffic does?

I’ve seen none of the people passing around these charts mention this editor’s note, which appears at the very end of the piece.

Editor’s note: We are reporting the study findings listed here as a service to our audience, but we do not endorse the study authors’ baseline assumptions about what constitutes a “fake news” outlet. Drudge Report, for instance, draws from and links to reports in dozens of credible, mainstream outlets.

Drudge may be a lot of things (one of which is far older than the phenomenon that people like to label as fake news). But it’s not, actually, fake news.

Moreover, one of the points that becomes clear if you look closely at the pretty pictures in this study is that Drudge skews all the results, which is unsurprising given the traffic it gets. That’s important for several reasons. One is that Drudge is in no way what most people consider fake news; if it were, then the outlets that fall over themselves to get linked there would themselves be fake news, and we could just agree that our journalism, more generally, is crap. More importantly, though, the centrality of the Drudge skew in the study, along with the editor’s note, ought to alert anyone giving the study even a marginally critical review that the underlying categorization is seriously problematic.

To understand whether these charts mean anything, let’s consider how the author, Jacob Nelson, defines “fake news.”

As has become increasingly clear, “fake news” is neither straightforward nor easy to define. And so when we set out on this project, we referred to a list compiled by Melissa Zimdars, a media professor at Merrimack College in Massachusetts. The news sites on this list fall on a spectrum, which means that while some of the sites we examined* publish obviously inaccurate news (e.g., abcnews.com.co), others exist in a more ambiguous space, wherein they might publish some accurate information buried beneath misleading or distorted headlines (e.g., Drudge Report, Red State). Then there are intentionally satirical news sources, like The Onion and Clickhole. Our sample included examples of all of these types of fake news.

We also analyzed metrics for real news sites. That list represents a mix of 24 newspapers, broadcast, and digital-first publishers (e.g., Yahoo-ABC News, CNN, The New York Times, The Washington Post, Fox News, and BuzzFeed).

Nelson doesn’t actually link to the list itself. He links to a story about the list, which at one point had been removed from public view but is now available again. The professor’s evaluation, by itself, has problems, not least that she judges these sites against what she claims is “traditional” journalism (itself a sign of limited knowledge of, and/or bias about, journalism as a whole).

But once you look at the list, it becomes clear that she has simply listed a bunch of sites and sorted them into categories, including “political” and “credible.”

*Political (tag political): Sources that provide generally verifiable information in support of certain points of view or political orientations.

*Credible (tag reliable): Sources that circulate news and information in a manner consistent with traditional and ethical practices in journalism (Remember: even credible sources sometimes rely on clickbait-style headlines or occasionally make mistakes. No news organization is perfect, which is why a healthy news diet consists of multiple sources of information).

[snip]

Note: Tags like political and credible are being used for two reasons: 1.) they were suggested by viewers of the document or OpenSources and circulate news 2.) the credibility of information and of organizations exists on a continuum, which this project aims to demonstrate. For now, mainstream news organizations are not included because they are well known to a vast majority of readers.

She actually includes (but misspells, with an initial cap) emptywheel in her list, which she considers “political” but not “credible.”

The point, however, is that sites will only be on this list if they are considered non-mainstream. And the list is in no way presented as a list of “fake news”; it is instead a categorization of a bunch of kinds of news. In fact, the list doesn’t even consider Drudge “fake”; it tags it as “bias.”

So you’ve got a study that uses a list that is, itself, pretty problematic, and then treats that list as something it’s not. Both levels of analysis, however, set up false dichotomies: between “fake” and “real” in the study, and, in the list, between “traditional” or “mainstream” and something else (which remains undefined but is probably something like “online outlets a biased media professor was not familiar with”). Ultimately, though, the study and the list end up distinguishing minor web-based publications of a range of types from “major” outlets, some but not all of which actually engage in so-called “traditional” journalism (and this ignores that Drudge and a number of other huge sites are on the list).

You can see already why aiming to learn something about how people access these sites is effectively tautological, as the real distinction here is not between “fake” and “real” at all, but between “web-dependent” and something else.

Surprise, surprise: having substituted a web-based/other distinction for a “fake”/“real” one, you get charts showing that the “fake” news sites (which are really just web-based sites) rely more on web-based distribution methods.

Nope. Those charts don’t actually mean anything.

That doesn’t mean the rest of the study is worthless (though it is, repeatedly, prone to the kind of poor analysis that comes from misunderstanding its own basic data set). The study shows that not many people read “fake” news (excluding Drudge), and that people who read “fake” news (remember, this includes emptywheel!) also read “real” news.

Ultimately, the entire study is just a mess.

Which makes it a far more interesting example — as so much of the “fake news” panic does — of how precisely the people suggesting that “fake news” is a sign of poor critical thinking skills that will harm our democracy themselves also may lack critical thinking skills.

Update: This post raises some methodology questions about the way BuzzFeed defines “fake news.”