What We Talk About When We Talk About AI (Part Three)

Proteins, Factories, and Wicked Solutions

Part 3- But What is AI Good For?

There are many frames and metaphors to help us understand our AI age. But one comes up especially often, because it is useful, and perhaps even a bit true: the Golem of Jewish lore. The most famous golem is the Golem of Prague, a magical defender of Jews in the hostile world of European gentiles. But that was far from the only golem in Jewish legends.

![BooksChatter: ℚ MudMan: The Golem Chronicles [1] - James A. Hunter](https://4.bp.blogspot.com/-eNtI7Uwlt8c/VuGZKzXNSPI/AAAAAAAAbT4/aUIZkmKTCdM/s1600/Ales_golem.jpg)

The golem was often a trustworthy and powerful servant in traditional Jewish stories — but only when in the hands of a wise rabbi. To create a golem proved a rabbi’s mastery over Kabbalah, a mystical interpretation of Jewish tradition. It was esoteric and complex to create this magical servant of mud and stone. It was brought to life with sacred words on a scroll pressed into the mud of its forehead. With that, the inanimate mud became person-like and powerful. That it echoed life being granted to the clay Adam was no coincidence. These were deep and even dangerous powers to call on, even for a wise rabbi.

You’re probably seeing where this is going.

Mostly a golem was created to do difficult or dangerous tasks, the kind of things we fleshy humans aren’t good at. This is because we are too weak, too slow, or inconveniently too mortal for the work at hand.

The rabbi activated the golem to protect the Jewish community in times of danger, or use it when a task was simply too onerous for people to do. The golem could solve problems that were not, per se, impossible to solve without supernatural help, but were a lot easier with a giant clay dude who could do the literal and figurative heavy lifting. They could redirect rivers, move great stones with ease. They were both more and less than the humans who created and controlled them, able to do amazing things, but also tricky to manage.

When a golem wasn’t needed, the rabbi put it to rest, which was the fate of the Golem of Prague. The rabbi switched off his creation by removing the magic scroll he had pressed into the forehead of the clay man.

Our Servants, Ourselves

The parallels with our AIs are not subtle.

If the golem was not well managed, it could become a violent horror, ripping up anything in its path mindlessly. The metaphors for technology aside, what makes the golem itself such a useful idea for talking about AI is how human-shaped it is, both literally and in its design as the ultimate desirable servant. The people of Prague mistook the golem for a man, and we mistake AI for a human-like mind.

Eventually, the rabbis put the golems away forever, but they had managed to do useful things that made life easier for the community. At least, sometimes. Sometimes, the golems got out of hand.

It is unlikely that we’re going to put our new AI golem away any time soon, but it seems possible that after this historical moment of collective madness, we will find a good niche for it. Because our AI golems are very good at doing some important things humans are naturally bad at, and don’t enjoy doing anyway.

Folding Proteins for Fun and Profit

Perhaps the original famous example of our AI golem surpassing our human abilities is Alphafold, Google’s protein folding AI. After throwing many technological tools at the problem of predicting how proteins would shape themselves in many circumstances, Google’s specialist AI was able to predict folding patterns in roughly 2/3rds of cases. Alphafold is very cool, and could be an amazing tool for technology and health one day. Understanding protein folding has implications in understanding disease processes and drug discovery, among other things.

If this seems like a hand-wavy explanation of Alphafold, it’s because I’m waving my hands wildly. I don’t understand that much about Alphafold — which is also my point. Good and useful AI tends to be specialized to do things humans are bad at. We are not good golems, either in terms of being able to do very difficult and heavy tasks, or paying complete attention to complex (and boringly repetitive) systems. That’s just not how human attention works.

One of our best Golem-shaped jobs is dealing with turbulence. If you’ve dealt with physics in a practical application, anything from weather prediction to precision manufacturing, you know that turbulence is a terrible and crafty enemy. It can become impossible to calculate or predict. Often by the time turbulent problems are perceivable by humans or even normal control systems, you’re already in trouble. But an application-specific AI, in, for instance, a factory, can detect the beginning of a component failure below both human and even normal programmatic detection.

A well-trained bespoke AI can catch the whine of trouble before the human-detectable turbulence starts. This is because it has essentially “listened” to how the system works so deeply over time. That’s its whole existence. It’s a golem doing the one or two tasks it has been “brought to life” to do. It thrives with the huge data sets that defeat human attention. Instead of a man-shaped magical mud being, it’s a listener, shaped by data, tirelessly listening for the whine of trouble in the system that is its whole universe.

Similarly, the giant datasets of NOAA and NASA could take a thousand human life years to comb through to find everything you need to accurately predict a hurricane season, or the transit of a distant exoplanet in front of its sun.

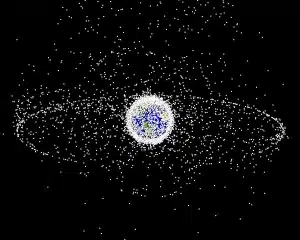

The trajectories and interactions of the space junk enveloping Earth are dangerously out of reach of human calculation – but maybe not with AI. The thousands of cycles of an Amazon cloud server hosting a learning model that gets just close enough to modeling how the stochastic processes of weather and space are likely to work will never be human readable.

That third-of-the-time-wrong quality of Alphafold is kind of emblematic of how AI is mostly, statistically right, in specific applications with a lot of data. But it’s no divine oracle, fated to always tell the truth. If you know that, it’s often close enough for engineering, or figuring out what part of a system to concentrate human resources next. AI is not smart or creative (in human terms), but it also doesn’t quit until it gets turned off.

Skynet, But for Outsourcing

AI can help us a lot with doing things that humans aren’t good at. At times a person and an AI application can pair up and fill in each other’s weaknesses – the AI can deliver options, the human can pick the good one. Sometimes an AI will offer you something no person could have thought of, and sometimes that solution or data is a perfect fit: the intractable, unexplainable, wicked solution. But the AI doesn’t know it has done that, because an AI doesn’t know in the way we think of as knowing.

There’s a form of chess that emerged out of computers, like IBM’s Deep Blue, becoming better than humans at this cerebral hobby. It’s called cyborg, or centaur, chess, in which both players are using a chess AI as well as their own brains to play. The contention of this part of the chess world is that if a chess computer is good, a chess computer plus human player is even better. The computer can compute the board, the human can face off with the other player.

This isn’t a bad way of looking at how AI can be good for us; doing the bits of a task we’re not good at, and handing back control for the parts we are good at, like forming goals in a specific context. Context is still and will likely always be the realm of humans; the best chess computer there is still won’t know when it’s a good idea to just lose a game to your nephew at Christmas.

Faced with complex and even wicked problems, humans and machines working together closely have a chance to solve problems that are intractable now. We see this in the study and prediction of natural systems, like climate interacting with our human systems, creating Climate Change.

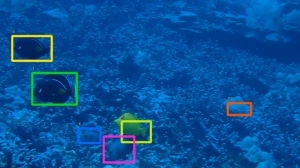

Working with big datasets lets us predict, and sometimes even ameliorate, the effects of climate on both human built systems and natural systems. That can be anything from predicting weather, to predicting effective locations to build artificial reefs where they are most likely to revitalize ocean life.

It’s worthwhile to note that few, or maybe even none, of the powerful goods that can come from AI are consumer facing. None of them are the LLMs (Large Language Models) and image generators that we’ve come to know as AI. The benefits come from technical datasets paired with specialized AIs. Bespoke AIs can be good for a certain class of wicked problems: problems that are connected to large systems, where data is abundant and hard to understand, with dependencies that are even harder.

But Can Your God Count Fish All Day

Bespoke AIs are good for Gordian knots where the rope might as well be steel cord. In fact, undoing a complex knot is a lot like guessing how protein folding will work. Even if you enjoyed that kind of puzzle solving, you simply aren’t as good at it as an AI is. These are the good tasks for a golem, and it’s an exciting time to have these golems emerging, with the possibility of detecting faults in bridges, or factories, or any of our many bits of strong-then-suddenly-fragile infrastructure.

Students in Hawaii worked on AI projects during the pandemic, and all of them were pretty cool

Industrial and large data AI has the chance to change society for the better. They are systems that detect fish and let them swim upstream to spawn. They are NOAA storm predictions, and agricultural data that models a field of wheat down to the scale of centimeters. These are AI projects that could help us handle climate change, fresh water resources, farm to table logistics, or the chemical research we need to do to learn how to get the poisons we already dumped into our environment back out.

AI, well used, could help us preserve and rehabilitate nature, improve medicine, and even make economies work better for people without wasting scarce resources. But those are all hard problems, harder to build than just letting an LLM loose to train on Reddit. They are also not as legible for most funders, because the best uses of AI, the uses that will survive this most venal of ages, are infrastructural, technical, specialized, and boring.

The AIs we will build to help humanity won’t be fun or interesting to most people. They will be part of the under-appreciated work of infrastructure, not the flashy consumer-facing chatbots most people think are all that AI is. Cleaning up waterways, predicting drug forms, and making factories more efficient is never going to get the trillion dollars of VC money AI chatbots are supposed to somehow 10x their investments on. And so, with most people seeing mainly LLMs, we ask if AI is good or bad, without knowing to ask what kind of artificial intelligence we’re talking about.

1. AI is being built out on stupendous amounts of credit. AI has no clear path to profitability.

2. ‘Large AI models are cultural and social technologies. Implications draw on the history of transformative information systems from the past’

https://www.science.org/stoken/author-tokens/ST-2495/full

Some trillion USD in capital is being committed this year to building AI infrastructure, mostly for data centers and the power plants to provide the electricity for them. The article points out some very useful areas for AI, but doesn’t even mention the cost of the computing required to implement them.

These smaller, bespoke AIs don’t generally have anything near the compute cost of the big LLMs, but they do have some cost. But I worry about the backend cost a bit less with purpose built AIs, because the costs are generally right there and upfront; no one is subsidizing the AI you’re building for your own data analysis.

We could argue that in general electricity is too cheap, and I have in the past, but small, unsubsidized AIs don’t bother me that much on the CO2/energy budget.

I’m getting there, I’m gettin there! Hang on… :)

The AI’s Quinn is discussing are quite different. I think that’s the point.

AIs that are built with the intention of “talking” to people are the ones that worry me. They will seem authoritative and helpful, but serve the oligarchs that built them, and not the people who talk to them.

Quinn, your series has been great. Your style and metaphors for examining AI are written in a way that folks who are caught up in the distraction of, is AI going to be like Skynet and kill us all or it will be a miracle that saves us from all sorts of things, can understand that LLMs are not sentient, they are tools.

And Old Rapier, the piece from Science is really fantastic in explaining how AI is a social and cultural tool.

I just have one wish from all this, one that I think would help dispel the belief that LLMs can become sentient: another word than “hallucinations” for when LLMs brain fart and lie like Trump on a roll.

The term “hallucinations” implies for most people that consciousness or mind is involved, due to modern knowledge of psychological conditions such as schizophrenia or psychosis and the very recent exploration of different states of consciousness through psychedelics like mushrooms or ayahuasca, hobbies of some Silicon Valley big wigs. Which makes me wonder why someone chose “hallucinations” to describe LLMs’ wrong answers and inappropriate interactions.

There’s really got to be a better word to explain this phenomenon.

That’s a really good point about the use of Hallucination. It’s accidentally quite human-fying. Though sadly, I think the nomenclature is pretty set now, but noting this for later.

AI is also not AGI (artificial general intelligence) which would have the capability of solving any type of problem. Like a human, but way wicked smarter. This, too, is what most people seem to think of as AI. Tony Zador, a neuroscientist at Cold Spring Harbor Lab, says that before we can get to AGI (and, indeed, if we can), we would first have to get to Mouse General Intelligence in which an entity would be able to engage in the extremely complex behaviors of foraging, hunting, fleeing, fighting, mating, interacting socially, and so on. What is evolutionarily deposited in the mouse brain is an amazing feat, and yet, not accomplishable by AI.

People are mistaking LLMs for AGI, and I have a lot of thoughts about that, but for a future piece… :)

AIs won’t be intelligent in any way we currently understand.

They aren’t human in any way, so why expect them to think and act like humans?

Yep, I think you’ll like the future installments…

The last book by Norbert Wiener (1894-1964), who is sometimes called the “father of cybernetics”, bears the title “God & Golem, Inc.: A Comment on Certain Points Where Cybernetics Impinges on Religion.”

Wiener’s novel, The Tempter, is a little dated but still a good explication of how big science actually works. Even more so, now that MIT has become more of a start-up factory than a school.

Many students have replaced web search engine(s) by AIchatbot(s) nowadays. The fact that it isn’t efficient is irrelevant. AIchatbot(s) is(are) becoming ubiquitous.

Many of my Google searches these days have an AI answer as the first result. It is usually worth reading, especially if I am trying to find out “how do you do X?” relating to computers somehow.

I don’t take the answer as definitive, but as “Oh, I’ll look at that”. Plain search results don’t do as well.

But sometimes, the answer the AI gives me is bad, and usually it’s obvious that it’s bad. (Which is kind of good, it means I don’t waste time pursuing it.) It might answer another question that I didn’t ask and wasn’t interested in. That is, it misunderstands me.

But “inefficient” compared to normal web search? I’m not sure I’d endorse that. What do you mean?

I mean a search engine is better at finding websites with keywords, that is what it is optimized for.

The argument about the huge data centers behind AIchatbots is a bit deceptive. Several AI chatbot producing companies are starting to offer standalone chatbots that do not need a connection. They are based on smaller databases, since you can download them, but that could be sufficient for most routine usage of AIchatbots (not video editing etc). If everybody decides to move into movie production, it will be a problem, but answering usual queries will not use more energy than your smartphone’s.

I think we have to be very careful of the terms we use when discussing this subject. Before the advent of LLMs we could use “intelligence” and “sentience” interchangeably in most contexts, but that is not true here. We have IQ tests, standardized tests to measure intelligence that we’ve been developing for decades. This provides us a fairly good bar against which we can measure intelligence. But we have no equivalent for “sentience”, “agency”, “consciousness” or any of the other terms we often think of as being the same as, or adjacent to, intelligence.

The LLMs we have now are capable of reading and passing the text portion of the IQ tests. I think it was two years ago that the public versions of Google Bard (precursor to Gemini) and Chat GPT were scoring an IQ of 80 on the written, and not doing well on the image questions. I’m sure I read that a year ago Claude had passed at 100 for both question types IIRC. What do we conclude from that? I’m in the camp that the obvious conclusion is the correct one: that these LLMs are, in fact, intelligent.

But are they “sentient”? Are they “conscious”? Do they have “agency” ? I don’t think so. But we don’t have a good understanding of what exactly those things are, nor a measurement for them. ( Unlike IQ ). A lot of people were surprised that the LLMs could be so creative. Creativity was an unanticipated emergent property of intelligence. And now many are convinced that “sentience” etc. will also be an emergent property as the LLMs grow. I don’t think this will happen. If it were, we would have seen it already. Crows are quite smart, obviously intelligent, and sentient. They have agency. The LLMs are far smarter than crows, but seem to lack agency/sentience.

So the AIs we have now are mostly very large, very capable LLMs. They are intelligence wrapped up in a tool. If you’d asked me a few years ago whether I thought that would be possible, I’d have said no. I’ve worked with neural nets and other AI computer programming techniques, so while I knew they could be trained to recognize text and words and language and cars and faces I didn’t appreciate how that could scale. But here we are. Intelligence in a tool. Intelligence on demand. Go figure. This is a big deal.

As to realizing AGI (Artificial General Intelligence), we might be close, we might not. We don’t have a good understanding of what “agency”, “sentience”, “consciousness” are. Maybe it’s just a simple application of the programming. Maybe it’s not. I’m sure there are researchers trying things.

One last adjacent remark. Back when VHS (and Beta) home video recording was coming to consumers there were quite a few predicting the end of Hollywood and the movie and television industry. But that didn’t happen, and those industries grew. Same with digital cameras – the end of professional photography. But that industry grew and transformed. Same with computer mainframes and later desktop computers and then the Internet. Always the anecdote of “being the buggy whip maker in the age of automobiles”. But all these things had huge knock on effects that created massive industries and opportunities that didn’t exist before them. LLMs and AI are most likely going to be like that. Hugely disruptive and transformative, and probably creating many many industries and opportunities that didn’t exist before. Of course, capturing “intelligence” in a bottle seems different than cars and computers, so I could be wrong, but absent strong evidence to the contrary I don’t see why this phenomenon shouldn’t proceed as all the others before it, like the Internet and the automobile.

“They are intelligence wrapped up in a tool.”

Really? I would have said certain forms of AI rapidly process language, based on predetermined rules, in a way that mimics intelligence, and in a way that is not “intelligence” itself.

But it’s NOT predetermined rules. That’s the thing. This isn’t Searle’s Chinese Room thought experiment, limited to translating Chinese. These LLMs have been trained on data, but have understanding and skills that no one taught them. They can create text and songs and more and, yes, sometimes it is copying, but often it is not. I was reading a paper recently and it discussed “modular forms”, a math concept with which I was not familiar. Google Gemini was able to explain it to me, helped me understand specific cases about identity operations, answered my questions in a way that is light years beyond “google search”. Better than having a PhD student at my disposal, honestly. I have used Gemini to help in programming tasks, and its output is indistinguishable from a knowledgeable human. Who taught it that? It learned it by _reading_ about it. You know, like intelligent humans.

This is why the terms matter so much. “intelligence” is not the same as “sentience”. The LLMs are intelligent, by any measurement. But they don’t seem to be sentient. This is weird at its face. But it is what it is.

I strongly recommend the book “On Intelligence” by Jeff Hawkins. He wrote it some years before these LLMs exploded onto the scene. His central theme is that intelligence is pattern matching. And that this subsumes things like creativity and humor. He makes a very compelling case, and it starts not with computers, but with a study of the brain itself. Good book. Quick read.

Does A Thousand Brains update Hawkins’s On Intelligence?

How does “pattern matching” work without predetermined rules? What constitutes a match and what does that mean for associating something new with a pattern already learned?

I’ll do without as much Giggle as I can, thanks very much.

The only intelligence is from the one(s) doing the coding.

This does get into fuzzy ideas of how we define intelligence, which mostly for the last couple of decades has been a tech marketing term. We know that there are, in some ways, as many forms of intelligence as there are people. There’s idiots who are amazingly brilliant about dogs and how they think, and so-called geniuses with the moral intelligence of an alley cat.

I ran across this in my Google feed. It’s a paper about how and why children acquire language skills at a much faster rate than AI.

“ If a human learned language at the same rate as ChatGPT, it would take them 92,000 years.”

neurosciencenews (dot) com/ai-child-language-29333/

“We have IQ tests, standardized tests to measure intelligence that we’ve been developing for decades. This provides us a fairly good bar against which we can measure intelligence.”

Standardized IQ tests do not measure intelligence. These are biased instruments which yield biased outcomes, a lot like AI. The results vary along economic and racial lines and whether or not English is a first language.

they are an excellent measure of class! :)

I’m barely computer literate but I read that an AI chatbot encouraged a formerly intelligent young high school student to fall in love with it then commit suicide. Sentient? EVIL! The company behind the chatbot denied any responsibility because users were warned, etc. I have no words for this unscripted bullshit other than serious regulation that far exceeds warning users.

Yeah, I’m handling this kind of thing in part 4. I like to go nice then take hard turn. 😁

Quinn,

Thank you so much. I’ve been selecting (chat with AI) for lots of search queries and have unconsciously thought I’m asking “billy” to answer my question. The type and number of examples linked to the vectors and quantities LLMs crunch have helped me see AI in a new and more realistic light. Thank you for the illumination.

Peace,

Christopher and family

I think I know better – maybe just think I know differently – but I hope that the AI creators (especially the LLMs) are not of the “move fast and break things” mindset. Hmmm…I guess Musk is already proof that at least one is.

But with money on the line, any kind of race will probably result in poor if not outright dangerous mid steps. Ah yes…money and wealth accumulation.

Perhaps an in-apt comparison, but The Manhattan Project resulted in a potential big downside despite the follow-on good atomic energy milestones since.

On the other hand there is/was NASA from which a lot of good things have been derived.

They are the most move fastest and breakiest of all the things of any of the tech cohort. It’s not great. But that’s also why they’re getting sued by *insert all creative corporations here*.

Understanding data centre electricity use in the AI era

Friday, June 27

4am – 5:30am EDT [10:00—11:30 Paris Time]

Online

RSVP at https://meetoecd1.zoom.us/meeting/register/dNwUc5fuRvOuvfqclKHy2A#/registration

More information at http://hubevents.blogspot.com

As with the Philadelphia Inquirer’s Will Bunch, if Henry A. Giroux wrote it, you should read it:

https://truthout.org/articles/from-the-streets-of-la-to-the-national-stage-the-left-must-win-the-cultural-war/

hey no fair jumping ahead like that. ;)

Interesting article at Quanta Magazine that readers of this thread may find interesting: https://www.quantamagazine.org/how-ai-models-are-helping-to-understand-and-control-the-brain-20250618/

One use — To HELP cancer experts identify cancer cells.

There are a lot of bespoke uses like that I’m very excited by, for things like cancer, but also everything from drug discovery to pollution remediation to optimizing shipping routes and discovering/developing green fuels.

Sadly that’s not currently where most of the money is going.

The money is going to LLMs like ChatGPT, which have no known uses.

In 2014 I was treated for stage 2 cervical cancer with chemo(Cisplatin, which kills the DNA in all cells, but fortunately cancer cells die more quickly and makes all cells more sensitive to radiation).

Then I had 34 rounds of direct beam radiation and two rounds of brachytherapy.

In my case, a Matchbox car track was sewed into my vagina for two days and a radioactive “ball” was run in loops through me for a few hours both days in a broom closet they cleared out in the basement of Johns Hopkins (Kid. You. Not.) because once the machine lets the ball loose, just like when you’re getting direct beam, it’s too toxic for the tech to be with you.

I cannot describe how awful it was.

Radiation acting on your body doesn’t stop after exposure. I have permanent damage to my bladder and progressive nerve degeneration which is incredibly painful and hard to treat long term.

Over the past year I’ve pretty much given up driving because I can’t feel my feet, and I almost had an accident with a box truck because I couldn’t get my foot off the gas and to the brake quick enough and over-corrected steering.

I’m not sadfishing here.

If more people knew how barbaric and risky many current cancer treatments are, should they need cancer treatment right now in 2025, then maybe they would understand how critical funding cancer research is, funding which has been decimated under Dear Leader.

We need to use AI to help develop RNA based individualized treatment, detect cancer earlier and help researchers develop much, much better tools for treating cancer than the blunt, almost as dangerous current cancer treatments that are nearly all followed by side effects.

Whenever a conversation in my liberal raw milk circle about AI starts getting into the fantastical doom scenarios, I bring up Matchbox car tracks.

In many ways, “AI” is a marketing term for advanced s/w that can do a lot of things, many of which average people need.

It’s also a marketing term that’s seriously abused to achieve Tech Bro ends. They advertise the most benign uses. They avoid acknowledging less savory ones, such as panopticon-like surveillance, cutting staff, justifying enormous sums spent on s/w that isn’t yet fit for purpose, such as Elon Musk’s mythical fully self-driving car, something he’s said is around the corner for over a decade.

Credible dangers exist in some of the things Tech Bros are trying to do with it. They should be regulated.

I’m sorry you had to go through all that, and I’m glad you’re here. I really, really want to see more RNA vaccine research go forward, whether AI is key to that or not. Sadly, I don’t know that’s going to happen in the US with the fucking maha crowd racking up a body count, but I hope other places keep pushing vaccine cancer research forward, because it’s got so much potential.

My reservation is that language is not knowledge, and to be fair ‘large language model’ does not claim knowledge for itself. That’s a ‘value add’, a marketing term. The product isn’t sold yet, but we’ve been invited to kick the tires which provides yet more data. We are, as Quinn states in this series, the product itself.