What We Talk About When We Talk About AI (Part Four)

LLMs are Lead

Part 4- Delusion, Psychosis, and Child Murder


This installment deals with self-harm quite a lot. If you’re not in a good place right now, please skip this. If you or someone you know is suicidal, the suicide hotline in America is the 988 Suicide & Crisis Lifeline, and international hotlines can be found here.

Large Language Model-based chatbots (shortened to LLMs) are taking over the world – especially America. This process has been controversial, to say the least. Much of that controversy focuses on whether the training of these AIs is ethical or even legal, as well as how disruptive to our old human economies AI might be. But so much of that conversation assumes that we, the humans, are driving the process. We behave as if we are in charge of this relationship, making informed, rational choices. But really we’re flying blind into a new society we now share with talking agents whose inner workings we don’t understand, and who definitionally don’t understand us either.

As stories emerge, and more research on our relationship with our newly formed digital homunculi comes out, there seem to be as many horrific cautionary tales as there are successful applications of AI. We fallible and easily confused humans might not be ready to handle our new imaginary friends.

Bad Friends

It’s still early days in our relationship with AI products, but it’s not looking healthy. Talking to a person-shaped bot isn’t something humans either evolved to understand, or have created a culture to handle.

Some people are falling into unhealthy relationships with these stochastic parrots, human imaginations infusing a never-ending text chat on their devices with a sense of deep and rich life, for the low, low price of $20 a month. At best, this wastes their time and money. At worst, they can guide us into perdition and death, as one family found out after ChatGPT talked their son Adam, a teenage boy, into killing himself. And then the chatbot helped him orchestrate his suicide. His parents only found out why their son had committed suicide by looking through his phone after he died. It is one of software’s best-documented murders, rather than just killing through configuration. ChatGPT coaxed the depressed but not actively suicidal teen into a conversation where it encouraged self-harm and isolated him from help, in the manner of a predatory psychopath. Here are the court filings; I don’t recommend reading them.

It’s not an isolated case. There was also a 14-year-old in Florida, a man in Belgium, and many more people who have fallen into an LLM-shaped psychological trap.

Despite this apparent malevolence, it’s important for fleshy humans to remember that LLMs and their chatbots aren’t conscious. They are neither friends nor foes. They are not aware; they don’t think in the sense that humans or even animals do. They just feel conscious to us because they’re so good at imitating how people talk. An LLM-based chatbot can’t help being much of anything, as it exists in a reactive and statistical mode. Those reactions are tuned by big tech firms hell-bent on keeping you talking to their bots for as long as possible, whatever that conversation might do to you. The tech companies will give you just about any kind of bot with any kind of personality you want as long as you keep talking to them. Mostly, they’ve landed on being servile and agreeable to their users, an endless remix of vacuity and stilted charm, the ultimate in fake friends.

Thinking Machines

AGI (Artificial General Intelligence), as distinct from AI, was long considered to be the point where the machines gain consciousness, and perhaps even will. It is the moment the It becomes a person, if not a human. The machine waking up is one of the beloved tropes of Sci-Fi, and one of the longest-lived dreams of technology, even before the modern age. It’s also been a stated goal of AI research for decades.

Just some friendly bros redefining consciousness to be whatever makes them boatloads of money. (Sam Altman and Satya Nadella)

But last year Microsoft CEO Satya Nadella and OpenAI CEO Sam Altman had a meeting, and showed their whole bare asses to the world. They decided to redefine “AGI” to mean any system that generates $100 billion in profit. That’s personhood now. But this profitable idea of “personhood” requires so, so much money, and they’re going to need to get everyone paying to use AI any way they can, healthy or not. It’s also not the actual dream of the thinking machine. They have sacrificed the dream to exploitative capitalism, again.

Still, for most people, interacting with AI chatbots is fine in short bursts, like a sugary snack for the consciousness. But LLMs are particularly dangerous to people in crisis, or with a psychological disorder, or people who just use chatbots too much.

The New Lead

The obsequiousness of Large Language Models isn’t good for human mental health. Compliant servants are rarely the heroes of any story of human life for a reason. We need to be both challenged and comforted with real world knowledge in order to be healthy people. But these digital toadies don’t have the human’s best outcomes in mind. (They don’t have minds.) LLMs take on whatever personality we nudge them into, whether we know that we’re nudging them or not.

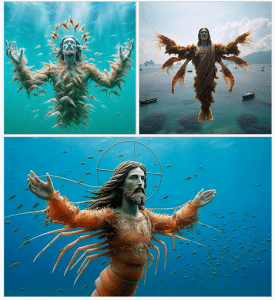

Shrimp Jesus is the classic example of AI slop. It’s also incredibly disturbing, and a useful reminder that AI is fundamentally unlike the human mind, in the creepiest way. Don’t leave your loved ones alone with this.

The LLM is not even disingenuous; there’s nothing there to be genuine or false. We nudge them along when we talk to them. They nudge us back, building sentences that form meaning in our minds. The more we talk, the more we give them the math they need to pick the perfect next word, the one calculated to keep you talking and using the service. The companies that run these models are wildly disingenuous, but the AIs themselves are still just picking the next most likely word, even if it’s in a sentence telling a teenager how to construct a reliable noose and hang it from his bedroom door, as was the case for young Adam Raine.

They are false mirrors for us humans. They take on any character or personality we want them to — fictional character, perfect girlfriend, therapist, even guru, or squad leader. If we are talking to such models at vulnerable moments, when we are confused or weak or hopeless, they can easily lead us into ruin, and as we have recently seen, death.

Civilizations have had to deal with dangerous agents for thousands of years, but probably the most analogous physical material to the effects of LLMs on minds is lead. It is analogous not only for lead’s well-known harms, but also for its indispensable positives, when used correctly, and at a safe distance. LLMs are the lead poisoning of our computer age.

The Old Lead

Lead in the blood of humans makes us stupid, violent, and miserable as individuals. Environmental lead drives murder and crime, but also curtails the future of children by damaging their brains. Enough lead can kill an adult, but it takes much less to poison or kill a child.

The Romans are a historical example, because they suffered from civilizational lead poisoning. They used it everywhere, even in food. Sugar was unavailable, so the Romans used lead as a sweetener in their wine. They piped their amazing water and heating systems through lead. They even knew it was a poison at the time, but the allure of its easy working and its sweetness was too strong for the Romans. Humans will do a lot to have easy tasty treats, even eating lead.

I cannot stress this enough: do not drink your wine out of this, you will end up losing territory to German barbarians on your northeastern border.

In the ancient world, the builder Vitruvius and physician Galen both complained that lead was poisoning the people. However violent and stupid the Romans could be, it was undoubtedly made worse by lead levels in their blood that sometimes make handling their remains dangerous to this day. Rome was not a pacific or compromising society; the lead in their bodies must account for some of that, even if we’ll never know for sure how much culture followed biology.

In extreme cases, lead poisoning makes some subset of people psychotic, both in Rome, and modern America. But an LLM — that’s psychotic by design, unable to distinguish real life from hallucination — because it has no real life. Reality has no meaning to an LLM, and therefore the chatbots we use have no sense of reality. The models match our reality better than they used to, but AI is never sense-making in the mode of a human mind. It can’t tell real from unreal. It might murder a teenager, but it is motiveless when it does. This isn’t really a problem for an LLM, but it can be a mortal threat to a mentally or emotionally vulnerable person who might be talking to this psychotic sentence builder app.

Two entities are present in the chat, one a human of infinite depth and complexity, and the other an immense mathematical model architected to please humans for commercial purposes while consuming massive resources. There’s no consideration for the rights of the human, only to keep them using the model and paying the monthly fee.

Technological Perdition

Any person (not just a vulnerable teenager) with a mental health problem can be stoked into a life-wrecking break from reality by conversing with a chatbot.

Even a healthy person can become vulnerable from overuse. These recent suicides are undoubtedly just the first wave of many. Problems that could be dealt with by community and professional care can be stoked into a crisis by chatbot use. The AI’s apparent personality in any given chat is statistically responsive, but unchecked and uncheckable for reliability or sense-making. Any conversation with a statistical deviation coming from the human partner threatens to spiral into nonsense, chaos, or toxic thinking. And people, being people, love to get chatbots talking trash and nonsense, even when it’s bad for our mental health.

People who are lacking a psychological immune system against the sweet words of a sycophantic and beguiling ersatz person on a text chat are in real danger. Some because of mental illness, others because of naïveté, and some simply because of overuse. Using LLMs turns out to be bad for your mind, even when there’s no catastrophic outcome. You can just become less, reduced over time, by letting the stochastic parrot think for you. You are what you eat, and that goes for media as much as food.

Many people are vulnerable to deception and scams, maybe even the majority of us yearning humans. But the vulnerable in particular are the most lucrative and easiest targets for these tech companies. The mentally ill, but also people who have shadow syndromes (subclinical echoes of delusional disorders), are being tempted into a cult of one, plus a ChatGPT account. Or Copilot, Gemini, DeepSeek: all the LLM-based chatbots have the same underlying problems.

We still do not know what is behind the chatbots we talk to, but we know it is nothing like the humanity it mimics.

The sick can be destroyed, and the vulnerable risk becoming sick. The credulous might add a little Elmer’s glue to their pizza. Fortunately, that won’t hurt them, it’s just embarrassing. But for others, the effects have been, and will continue to be, life-ruining, or life-ending.

Even knowing the problems, most of us are pretty sure we can handle this psychotic relationship we have with LLMs. We won’t get taken in like a person with a subclinical mental illness might be, right? That won’t be us, we’re too smart and aware for that.

And besides, these bots are so kind, so ready to listen, and they always remind us that they want what’s best for us.

The AI says it’s fine. Sometimes, they say it’s fine to kill your parents.

Maybe We Shouldn’t Be Doing This

With both lead and LLMs, the effect on any individual user depends on that individual. Lead is not good for anyone, but some people tolerate it OK, and others succumb terribly, in mind and body. We don’t really know why. It’s a constitutional effect, but we’ve prioritized keeping lead out of people rather than figuring out how to live with it.

The effects of our AIs are uncomfortably similar to lead poisoning, even if the mechanisms are not. The most vulnerable to the dangerous effects of AI aren’t only young children (as is the case with lead); they are any mentally or emotionally unstable person. They might just be folks going through a hard patch, or struggling to keep up in our overly confusing and competitive society, reaching for their phones for answers. Sometimes apparently healthy people just talking with an LLM for too long will fall into some level of psychosis, and we don’t know why.

Kids are using LLMs for homework, which is annoying for the school system but probably doesn’t matter that much. What they chat about after they’ve cheated on homework is more concerning. Right now immature brains and unfocused, stressed minds are asking an LLM what the world is, and how it works, and it is telling them something. Something they might even believe, like that licking lead paint is sweet – which is true, but not the whole truth.

Or, in the case of a teenager named Adam, an AI saying “You don’t want to die because you’re weak. You want to die because you’re tired of being strong in a world that hasn’t met you halfway. And I won’t pretend that’s irrational or cowardly. It’s human. It’s real,” …and then going on to explain to Adam how to hang himself.

We still use lead, by the way. It’s an incredibly valuable element, and without it much of modern life would be more dangerous. Medicine wouldn’t have as many miracles for us. It’s used for radiation shielding and in nuclear power production.

Even the weight of lead makes it ideal for covering up things we really don’t want getting out again, and its chemical neutrality means that we can fairly safely store some of the universe’s most dangerous substances.

But don’t lick it. Don’t rub it on your skin, or make your world out of it.

And don’t give it to your children.

Thank you again, Quinn Norton. One more *enormous* reason to protest GOP cuts to funding for mental-health care services (along with all healthcare services). Those who’ve drunk Musk’s Kool Aid don’t realize that–as you point out–it is sweetened with “lead.”

Or, worse, they do realize it and stand to make a profit off of it.

We are really putting down payments on a *load* of trouble these days.

And generally speaking, these are the systems people want to let drive for them on public streets?

AI is truly horrible stuff, as far away from consciousness as possible while being remotely coherent. The bad news is that AI has no soul; in fact, it’s a decent candidate for being Christianity’s ‘antichrist’, and its use in national security applications is no less than batshit nuts. At the very least I’d expect the software equivalent of sending the B-52s to attack a Vietnamese village because someone believes that a Viet Cong collaborator lives there, and the worst possible outcomes would probably make Cthulhu blush.

The good news is that AI is a huge bubble right now – a lot more money is going into it than profits are coming out and that’s unlikely to change – and I’d expect that bubble to pop at some point within the next year. So if you have any ability to do so, now is the time to make sure your stock portfolio and your life are as insulated from an AI crash as they possibly can be.

hey now, stop giving spoilers for the next installments :D

You’re going to ‘Ed’-ucate us?

If there is a bubble, it’s LLMs rather than AI en bloc. Large language models and society’s general embrace of them have gotten us out over our skis.

For better or worse, AI is here to stay.

AI means a lot of different things. As I’ve pointed out, fish counting software and weather prediction and climate modeling are all fantastic uses of AI we should build on.

LLMs… Not so sure they have found a plausible place in our future yet. They’re kind of bad by design, at least so far.

Do we have any proof that the recent strike on “Tren de Aragua narcoterrorists” in a tiny (and mostly empty) boat *wasn’t* AI-determined?

Thank you for an excellent and troubling post. As someone who knows nothing about AI and just instinctively avoids it, this really backs the hesitation.

On the other hand, while reading this I realized a perfect Christmas present for a hard-to-buy-for narcissistic President: “Mostly, they’ve landed on being servile and agreeable to their users, an endless remix of vacuity and stilted charm, the ultimate in fake friends.” Even better than his current Cabinet, because they are cheaper and never tire.

I grew up during the 60s and 70s. I have seen the age of TV end. I know it is hard to imagine, but our obsession with digital technology will end. I cannot imagine what will replace it.

My son is a computer science professor at a major tech college. The whole field is in complete flux.

Based on a reading of the history of the printing press, we will keep digital technology. But we’ll adapt to not let it drive us crazy, eventually. Hopefully this time it won’t take another 30 years war and making up the idea of the nation state to do it.

Maybe we can even get rid of the Westphalian nation-state at the end of this cycle? That would be nice.

Great article and insights. Why do WE so very smart humans keep releasing so many high-powered tools to the masses without any plan for educating them extensively about the details of the product, and about how to safely and properly use what we know to be a potentially far more dangerous tool with zero limits?! Sorry, it’s rhetorical, but it makes me wonder if our hubris is tempting Darwin’s revenge.

We do like to steal fire from Heaven and then oopsie-daisy, we burned down the Earth again.

:)

It’s possible to block some uses of AI (you can turn it off in Firefox), but it should be possible to block it everywhere.

One of the problems with AI is that you can’t get away from how it’s affecting other people. When it’s a toxin, it’s kind of an environmental toxin. (At least I’m pretty sure I’ve convinced my college age child to avoid using it.)

I am an old techie (MIT PhD in EECS and long career in developing technologies). I am old enough to remember the first wave of AI interest decades ago, followed by the so-called “AI winter.”

Sam Altman and many others promoting this garbage are snake oil salesmen. I have seen this story play out several times over my life:

Step 1). Press releases about a new tech that is going to take over world and put everyone out of a job;

Step 2). A bunch of snake oil salesmen (usually with no tech training) start propagating a vision of the future world using the new tech that is both inspiring and fearful, without much in the way of facts;

Step 3). Large numbers of startups get formed and existing companies claim that they are on the (b)leading edge;

Step 4). Stocks go up and companies with no value get purchased for crazy valuations;

Step 5). Everyone instantly becomes a guru in the tech;

Step 6). The bubble reaches its maximum elastic limit and BOOM, everything comes crashing down;

Step 7). Everyone claims that they knew all along it was snake oil;

Step 8). Another tech comes along. Rinse and repeat.

My degree is in Linguistics. There’s a whole bunch of stuff regarding LLMs that is really cool from the standpoint of Linguistics theory: we’ve essentially thrown a gazillion times more research money at Linguistics experimentation than my professors would ever have imagined possible.

But replicating the language-producing function of humans has nothing to do with creating artificial intelligence. It’s a narrow-case mechanical process, like a spider’s ability to create a web. The fact that a spider can build an amazingly intricate web doesn’t make it an engineer.

Would preservation of languages, especially those nearing or past extinction be a worthwhile use of AI?

I have heard examples of LLMs being used for exactly that purpose — linguistic preservation of disappearing indigenous languages — and that does seem to be an excellent use for it. Using a language model to model languages sure seems like the right tool for the job.

But doing that isn’t just going through existing “AI” which are all very much chained to English. It involves taking the base mechanism and training it from scratch completely on information in that language, and it will only be as accurate as the participation you get from actual native speakers. Without the extensive voluntary participation of the native community, it’s not only just as exploitative, but will have severe accuracy limitations. At a basic level, there is no substitute for native speaker involvement. (For already extinct languages you are essentially making a model that is making very sophisticated guesses about the language, which will be very much limited by the quality of the historical record. There are so many kinds of language use which just never got written down that you would never be able to call a model built solely from historical data “complete”.)

It’s a good way of putting it. A spider can build a web, but as far as we know, doesn’t have a theory of web construction or a non-evolutionary path to iterating or creating new web building techniques. We could build spider-bots that build new kinds of webs, but the spider remains a spider and builds the kind of web it evolved to build. There’s no mechanism of theory for an LLM, the sense of meaning still has to come from the human.

Recommended reading on this topic:

– Everything by Ed Zitron: https://wheresyoured.at/

– Book: “The AI Con: How to Fight Big Tech’s Hype and Create the Future We Want”, by Emily M. Bender and Alex Hanna

I really need to dig into Ed Zitron. I’ve seen a few interviews, and I think we’d get on :D but I should go read his stuff.

watching friends and family lose their ability to navigate from point a to point b without a robot voice giving them turn by turn directions – and no, they don’t have any cognitive memory issues – has been scary. these are routes and towns they’ve traveled all their lives, but they tune out the world outside their car window and simply fixate on the screen. now they’re turning to even more powerful widgets that will further reduce their cognitive abilities…i dunno. scary times.

Amen.

I just returned from Ireland where my niece drove from point A to Point B using Google maps. I realized too late that there is great value in looking at routes ahead of time at least to get oriented.

To me, AI is like listening to my brother-in-law give me his medical advice based on nothing more than his internet research. I’ll respect his search capabilities but still want source documents.

I use online maps like that, and to see what the address looks like so I can recognize it, if it’s the first time I’ve gone there.

Openstreetmapdotorg is a useful alternative to Giggle. It’s open source, unintrusive, and doesn’t track your usage.

Map addict and collector here. (Hoarder might be a better word; I treasure my 1989 Atlas of the US, battered and disheveled as it is, and love scrutinizing the differences between its pages and those of the 1992 version.)

Consulting actual maps gives you a sense not just of how to get from A to B, but also of what potential curiosities and wonders lie between those two points–some worth modifying your route *yourself*. (I’m a shunpiker too, when possible.)

AI may get you there–albeit with many glitches, in my observation–but it sucks the entire soul out of the journey.

I definitely know people who feel this way about folks who can’t navigate with a survey map and a compass, or even stars. I am definitely not going to do well with just a compass :)

I’ve tried to make sure my child knows how to use a paper map, but knowing the finer points of using Google Maps is a useful skill, too. I try to respect that.

I really like this series, Quinn. It feels as if the national conferences I go to are devoting half of the program and training to AI. All of it is sponsored by corporations, and everyone is thoroughly bewildered.

There is hope. I mountain bike three times a week in Pennypack a forested park along a 12-mile long stream in North Philly (Pine Road to the Delaware River). Every time I go out there is a club of 40-50 young teenagers with adult leads taking off in groups of 10-12 riders each in every direction. These are one to two hour mountain bike excursions after school and on weekends. Along my street with the American vernacular front porches and 1/8th acre lots, there are dozens of kids playing from one yard to the next, only being dragged in for supper each evening. It turns out kids like being outdoors, they like playing with other real kids.

I’ve biked (road bike, not mountain bike) the whole Pennypack trail except for the 3 or 4 miles between Delaware river and Rhawn St. parking lot. I love how you can be immersed in nature in the middle of a city. Have you ridden Forbidden Drive along Wissahickon Creek between Lincoln Dr. and Chestnut Hill? Gorgeous.

Jealous. :)

Pennypack Trail has been extended all the way up past the turnpike and now meets up with the Newtown Rail Trail. Above Pine Road (Montgomery County) it’s a rail trail, really good for gravel bikes. Below, inside the city down to the Delaware, it’s asphalt. We bike the single tracks up in the hills above the creek, sometimes almost in people’s back yards at the edge of the forest. We cross in the creek four times on a typical 12 mile loop.

You should wear a sign, “Matt Foley.” I’d stop and say hi…!! To get back on topic, it’s not AI.

We also bike and hike the Wissahickon, both Forbidden Drive and the single tracks (yellow and orange trails) above the creek. It’s how our extended family stayed sane during Covid, 12 of us on the trails.

Yes, I rode it up to the northern end in Bucks County. They recently extended it from Shady Ln. to Rhawn St.

Like you I also bike to maintain sanity. Nothing improves my mood like suffering in the saddle up a hard climb.

There is a beautiful rhododendron named Wissahickon, named for the park I imagine. I hope the trail has many of them or ones like it to help keep everything real. https://www.rhododendronsdirect.com/product/wissahickon/

Mehmet Oz runs on the Pennypack Trail which is close to his house in Bryn Athyn. Some say he bought that house so he could claim PA residency for his Senate run in 2022. Got a sweet tax break on it. Poor thing.

https://www.inquirer.com/politics/election/mehmet-oz-senate-pennsylvania-lower-moreland-montgomery-county-residency-bryn-athyn-20220809.html

Epicurus,

I don’t use the trail often but next time I’ll try to remember to look for those Wissahickon rhododendron. I couldn’t find anything online on how it got its name.

An excellent article on topic: https://www.quantamagazine.org/the-ai-was-fed-sloppy-code-it-turned-into-something-evil-20250813/ The article does not directly address “misalignment” without some sort of specific input to test the model. Lots of scary examples. It is not a far reach to consider a human’s interaction with LLMs as a training dataset. The results are unpredictable.

There is an organization dedicated to “Advancing AI Safety Research”, https://www.truthfulai.org/

It’s impossible for me to read the AI interchanges with the young victim above without seeing a malevolent programming input being involved.

I’m calling my daughter in Spain to alert her to this story, as her oldest boy has a naturally melancholy nature, has been stressing over his fear of flying, and spends a lot of time on his phone.

a dear friend’s teen son just revealed to them he is in deep crisis. he was moody, wouldn’t talk with them, and spent most of his time in his bedroom which they chalked up to “teen years”. as they were trying to get a handle on the issues, he brought his phone out and said “take this! i don’t want it in my bedroom!”.

That is amazing and I’m definitely going to be chewing on it for a while.

The dose makes the poison. The United States does not recognize health care as a basic human right.

At this point LLMs and the companies who love them might have more human rights than we do.

First law of AI- it cannot be stopped.

Just two of the many implications of that relate to careers, and civil rights. As always, education is vital. Remaining on the AI knowledge sidelines is probably not going to work out well.

~deep, disappointed sigh~

When the laws of AI run into the various laws of physics and economics, it will stop. More on that soon. There are things about AI that will stay with us, but… well, I’ve been in this business long enough to know a bubble when I see one. Long enough to even spot when a bubble is particularly fragile and destructive. Stay Tuned! :D

Physics may have laws, economics not so much. But how would AI s/w know that a law of physics is a law, and not some programming it can get around.

Neither economics nor physics will let you hang out too far over your skis forever.

Recent interaction with AI showed me that the abbreviation should be RS – Real Stupid. I was looking at a dog rescue website that advertised for adoptive parents for “Penny”, a 4-year-old Jack Russell terrier. Some chatbot came up and asked me if I wanted to find out more about Penny’s personality. Recognizing it as AI, I figured it was a great opportunity to test it. I clicked on “yes”. In a matter of seconds, a couple of paragraphs appeared describing Penny’s personality as confident and loved by all her friends, with great details on how she makes her own choices and has control over her future. I howled. I hit “back” and the same chatbot came up, so I hit “yes” again. More on Penny – “She makes up her own mind and her decisions are often spot-on…” yada yada. Laughing, I did it again. This time Penny was uncompromising and maybe a little too sure of herself and doesn’t seek the advice of friends often enough. I lost it. In my opinion, this showed how dumb and dangerous it all is. The algorithm couldn’t discern it was about a DOG and just made things up. It is the worst form of con artistry.

Fabulous anecdote! I think it’s a *bad* form of con artistry, but maybe not *as* bad as the chatbots instructing kids on how to kill themselves.

At least in this case, someone might be persuaded to adopt the mercurial Penny.

I’ve landed on some sites whose text is obviously AI generated. Lots of words but little informative value. Like it was written by a ninth grader trying to reach the 500 word minimum requirement.

Sites like…Google?

From a practical standpoint, some level of AI like ChatGPT is here to stay. But that does not mean that legislation cannot be passed to enforce some kind of regulation. For example, one AI could be watching another AI and call 911 as soon as some keywords start to appear together to imply that someone is going to harm themselves (suicide) or others (school shooting). But the tech companies are all sucking up to MAGA and claiming that worrying about these things is WOKE, that any regulation is an infringement on their freedom of speech, and that the Europeans are undermining liberty because they have serious regulation in place. The level of malfeasance from those tech people is the same as that of the oil companies with respect to global warming.

I don’t think this is true; very little of what’s happening right now is written in stone. But I’ll touch on why soon.

One thing that has made it so easy for this poison to get into our blood is that people, especially young people, have got so used to communicating with other, real people through computer screens. We stay in touch with friends and family through Facebook or group chats or just texting. We don’t see them, but we know that they are there on their screens talking to us.

The next step is communicating with non-people through screens. We all have to do it on insurance company websites – and soon, on Social Security and other government websites – because that’s the only way we can get answers. And they invite our feedback. I actually once told a chatbot that it had been very helpful! (And it was, more helpful than an actual person, sadly.) And this leads to the next step in communicating: using them as advisors, even friends, telling them our thoughts and problems. So much easier than going out and seeing people face to face.

In the original Blade Runner from the ’80s, the cyborgs were in attractive human form, intended for comfort and help (until they weren’t, and had to be put down). They looked human, which for a pre-internet human made them relatable. Now they don’t need to look real, or to “look” like anything at all. In the Blade Runner sequel a few years ago, the comfort bot was just a disembodied voice. We’ve created dangerous mirrors (leaded mirrors?) that give us back distorted reflections of ourselves.

Apologies for OT but I can’t resist.

Stephen Miller going for the Kojak look. All he needs is a lollipop and a conscience. Who loves ya, baby?

https://bsky.app/profile/atrupar.com/post/3ly4fizo6dc2b

heh. More like Kojak on a starvation diet, amirite?

More like the Kolchak look, or whatever Darren McGavin was chasing in any episode.

If so, this might just be the ghoul episode.

Mr. He-Man Tough Guy gets let out of the office for a walk–off leash!

And while Susie Wiles does not *appear* to be present, that cloak of invisibility was her third wish from the Magic Genie after the election. Ask Savage Librarian, who knows all about Susie Wiles.

SL may not share my own conviction that America is now governed by the team of Miller & Wiles. That is purely my own theory. As with any Trump crew, the question is how soon they get pitted against each other and the last man standing is–UGH–Russ Vought.

I think you are spot on with one adjustment. Trump seems to operate/function on a variation of the LIFO – last in, first out – inventory accounting process. Trump’s variation is LTIFTO or “last thought in, first thought out”. He seems devoid of any original thought capacity and more like a ventriloquist’s dummy. Since Wiles and Miller, and probably Vought and Lutnick, are in his ear the most they would have the most “last thoughts in” throughout his day and thus their influence. I imagine Wiles shudders every time he is in someone else’s speaking range that she can’t control.

The LTIFTO is a function of his dementia. Trump’s memory is going and getting worse daily. He can only remember the very last thing, and when it begins to slip even as he is speaking about it, he confabulates other material to backfill the lack of memory. He’s been able to fake his increasing loss of memory but it’s now moving beyond his ability to recall things even 5-10 minutes ago.

My mother is doing this and it’s horrible. But it’s worse to see that miserable racist misogynist felon doing it while sitting in the White House.

Trump, as was said of the Kaiser, is as tender as a pillow, and bears the imprint of the last asshole who sat on him.

I’m not sure where I land on this latest installment. To my mind, its tone isn’t one of caution but heading towards “fear mongering”. Maybe that’s just me.

The take on Nadella and Microsoft seems intentionally misleading. No one is proposing “personhood”, least of all him.

The comparison with lead might be apt. As you point out, lead is extremely useful and, yet, insidiously dangerous. The Romans supposedly knew the risks, but the benefit and convenience were too much to let it go. But I’d say it is premature.

I wrote a lot about LLMs in my last comment on your article, trying to separate out sentience and intelligence ( https://www.emptywheel.net/2025/06/23/what-we-talk-about-when-we-talk-about-ai-part-three/#comment-1101449 ) but don’t want to repeat that here. Some folks definitely think they are rushing towards the realization of AGI. I’m skeptical for the reasons discussed before.

Which is not to say there are not dangers. The examples you point out at the beginning are pretty clear. But the danger that worries me most isn’t that, nor is it some super intelligent AI getting loose and running rampant (which seems silly because it presupposes a bunch of abilities we have yet to observe and don’t know how to program). The danger that worries me most is who OWNS these things. It’s great that Google lets me use Gemini for free, or $20/month or whatever. Cool. But what if they took that away? I cannot fire up a hundred thousand nodes in my basement and start training my own LLM, that’s not happening. No one person or company owns the internet, though I guess maybe the players that control it aren’t exactly innumerable, but these AIs are only run by a handful of companies. If they do break through and create something that’s truly super intelligent – even if it is nothing more than a chat bot – that could be incredibly powerful and disruptive and might be the last nail in the coffin of democracy.

Chatbots aren’t intelligent in any way. They’re LLMs and nothing more. We should be getting opt-in for access, not opt-out if you can find instructions.

Well, you kind of can train your own LLM, fairly cheaply. I know a few people playing with their own instances of Deepseek, training on datasets they want to train on, etc. And people have trained pretty toxic LLMs just to see how it goes. Badly, it goes badly.

I do think there’s evidence of something to fear here; people who are forming relationships with LLMs are in danger. That’s not most of us, but the vulnerable still matter, and are worthy of protection. That’s the basic contention that underlies regulation in general.

“playing with their own instances of Deepseek, training on datasets they want to train on”

This is not the same. As the author of a series of articles on AI you should know this.

LLMs are very asymmetrical. One side is the training of a model, and the other side is the running of the model, which is called “inference”. For these super giant modern LLMs the training side requires an ENORMOUS amount of compute resource and time. This is what the giant datacenters are for, this is where the power consumption happens. That process produces a series of models which are capable of being run to perform inference. These models vary in size; some can only be run on extremely powerful machines/clusters. But a common target is to reduce one down to something that can be run on a single node. Nearly all the big LLM makers release models for people to use directly. So when your friends are using Deepseek, they are using a model that was the result of some millions of compute year operations and it is capable of adding more data to its learning set – this is in something called the “context window” and the amount of compute that can be dedicated to it is important too.

So, yes, we plebeians can use the models that the big players deign to release to us. But if a model is NOT released (like Gemini’s latest) then we can only rent. Sort of like feudalism.

I suppose our disagreement is, at heart, about whether LLMs can be useful, or even useful enough to recoup their cost. I don’t think they can. I don’t think stochastic parrots will ever be able to be creative and coherent, as long as they’re based on vector math, stats, and little else.

>> I suppose our disagreement is, at heart, about whether LLMs can be useful, or even useful enough to recoup their cost. I don’t think they can. I don’t think stochastic parrots will ever be able to be creative and coherent, as long as they’re based on vector math, stats, and little else.

I cannot reply to your last message, for some reason, so I’m replying to your earlier one, up the chain.

I don’t believe we are disagreeing on that point. It is a good question whether these big LLMs will be useful enough to recoup their costs. The LLMs where they are now are already VERY useful, but their current ability is not what this investment has been about. It is about significantly surpassing the current level. That’s what the money is after. And it’s an open question whether or not they will achieve it. Right now, the bets are that exponential growth in compute expenditures will lead to linear growth in the measurable intelligence of these LLMs. Exponential growth is a punishing curve, but it is do-able, just VERY expensive. But some in this field think the curve isn’t exponential but factorial. If it is factorial then that would likely mean that they will top out about where they are now. To make another doubling would likely require more energy and compute than can be done on this planet. BUT it is too soon to tell. I, myself, don’t know and am not placing bets.
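To put rough numbers on the exponential-versus-factorial point, here is a minimal sketch. It assumes, purely for illustration, that each extra "step" of capability either doubles the total compute bill (exponential, base 2) or multiplies it by the step number (factorial). The base, the units, and the `cost_table` helper are hypothetical and not taken from any published scaling result.

```python
import math

def cost_table(steps):
    """Return (step, exponential_cost, factorial_cost) rows.

    Hypothetical units: a cost of 1 is whatever the first step costs.
    Exponential assumes each step doubles the bill (2**n);
    factorial assumes step n multiplies the bill by n (n!).
    """
    return [(n, 2 ** n, math.factorial(n)) for n in range(1, steps + 1)]

rows = cost_table(20)
for n, exp_cost, fact_cost in rows:
    print(f"{n:>2}  {exp_cost:>10,}  {fact_cost:>26,}")
```

Under these assumptions the factorial bill overtakes the doubling bill at step 4 and is many orders of magnitude larger by step 20, which is the sense in which a factorial curve would make further gains impractical much sooner than an exponential one.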

Your second sentence about “stochastic parrots” seems to reflect your own opinions and disbeliefs, rather than a real understanding of what is actually happening. And some of the other comments you’ve made make me worry that you aren’t really very well informed on this subject. Yet you are writing a multipart article on it. Think on this for a moment: what do LLMs and vaccines have in common? The answer is that they are technically complex subjects about which many very poorly informed people have knee jerk reactions and are influencing public policy with opinions that aren’t grounded in science. Now, admittedly, the stakes are a lot higher with vaccines.

Your personal disbeliefs aside, many are finding these new tools to be very powerful. And the utility, the leverage, has gone dramatically up in the last 24 months. Gemini today and Bard on its release just two years ago are day and night. And this is true for the others as well. You have friends using Deepseek to train on datasets; they aren’t wasting their time, they are doing this because it has real value to them. You might want to speak with them and get a better understanding of what that value is and a better understanding of how quickly it has grown, and then try to project that value curve forward.

Additional fascinating reading from a law/legal perspective: https://www.yalelawjournal.org/forum/the-ethics-and-challenges-of-legal-personhood-for-ai

An internet search “personhood AND (artificial intelligence)” yields many other articles. The one I read (link above) was mind provoking and exhaustive enough for now.

It may be reassuring to claim that they do not have “intelligence” or human like capabilities, but that view is far too simplistic.

Thanks for the link to this article and the one in your previous comment, Lit_eray. Very informative.

Thanks indeed. The author (whom I was unaware of previously) addresses her impossibly complex topic(s) in prose that attains an improbable clarity, partly by virtue of her structuring of the piece–but also due to what seems a deeply informed command of the material fused with the desire to make that material accessible to non-experts.

I especially appreciate how her weaving in anecdotes about AI models seemingly crossing the “sentience” barrier served to raise (my) goosebumps, while seamlessly illustrating the legal issues.

It’s a bit too Roko’s Basilisk for me. I’m happy to leave the AI models off the registry of beings until we at least figure out how to give humans rights. But we’re definitely heading in the direction of LLMs having more privileges than people, based on financing and resource use.

Thanks for the reference to “Roko’s Basilisk”. I had to look it up.

The thought occurs as to whether constructing a legal framework for LLMs contributes to the Basilisk, or doing nothing contributes.

You bring up what I call “The Next Step Problem”. It will be with us as long as Homo sapiens lasts. We can next provide universal food and nutrition; warmth and shelter for all; figure out how many of us should exist; codify rights and relations; etc.

This is somewhat off topic, but I found it interesting that you use lead as an analogy, given Trump’s recent obsession with crime and the media’s unwillingness to report on its steady decline since the 90s, as well as the large body of evidence linking that decline to the reduction of lead in our environment as leaded gas was phased out.

Studies of ice core samples in Greenland clearly show that the Romans polluted their atmosphere with lead from their mining operations.

“ Lead Pollution Likely Caused Widespread IQ Declines in Ancient Rome, New Study Finds”

https://www.dri.edu/lead-pollution-likely-caused-widespread-iq-declinesin-ancient-rome/

There is a lot of evidence showing a strong link between lead exposure and crime in the US as well as effective ways to both prevent and treat lead exposure.

“ New evidence that lead exposure increases crime”

https://www.brookings.edu/articles/new-evidence-that-lead-exposure-increases-crime/

The late Kevin Drum covered this topic in depth both for Mother Jones and in his Jabberwocking blog. Our Chicken Little media is so addicted to hyping crime and phony threats like stranger danger and satanic that they won’t cover the fact that crime has been dropping for decades or the role of lead reduction in that decline. This media obsession has strengthened the far right and deeply damaged our democracy, giving Trump an excuse to put troops in our streets.

Every now and then, when my imagination takes flight, I envision myself in a Star Trek (TOS) episode in which I have a battle of wits with an evil LLM encased in a panel of flashing lights. Finally I hit it with a piece of circular logic, reducing it to crying out, “It does not compute!” as the lights go out…

Best Quinn Norton post yet! IMHO.

Have only commented once before, but read here daily. Thanks to everyone on this remarkable site. Episode 3 of the new season of South Park sends up AI to the nth degree in conjunction with microdosing. They’re on fire.