Why Would PRISM Providers Need to Pay Millions for New Certificates on Upstream Collection?

The Guardian has a story that rebuts the happy tales about quick compliance being told about the October 3, 2011 and subsequent FISA Court opinions. Rather than implementing a quick fix to the Constitutional violations John Bates identified, the government actually had to extend some of the certifications multiple times, resulting in millions of dollars of additional costs. It cites a newsletter detailing the extension.

Last year’s problems resulted in multiple extensions in the Certifications’ expiration dates which cost millions of dollars for PRISM providers to implement each successive extension — costs covered by Special Source Operations.

The problem may have only affected Yahoo and Google, as an earlier newsletter (issued sometime between October 2 and October 6, 2011) suggested they were the only ones that had not already been issued new (as opposed to extended) certificates. Moreover, the Guardian’s queries suggested that Yahoo did need an extension, Facebook didn’t, and Google (and Microsoft) didn’t want to talk about it.

A Yahoo spokesperson said: “Federal law requires the US government to reimburse providers for costs incurred to respond to compulsory legal process imposed by the government. We have requested reimbursement consistent with this law.”

Asked about the reimbursement of costs relating to compliance with Fisa court certifications, Facebook responded by saying it had “never received any compensation in connection with responding to a government data request”.

Google did not answer any of the specific questions put to it, and provided only a general statement denying it had joined Prism or any other surveillance program. It added: “We await the US government’s response to our petition to publish more national security request data, which will show that our compliance with American national security laws falls far short of the wild claims still being made in the press today.”

Microsoft declined to give a response on the record.

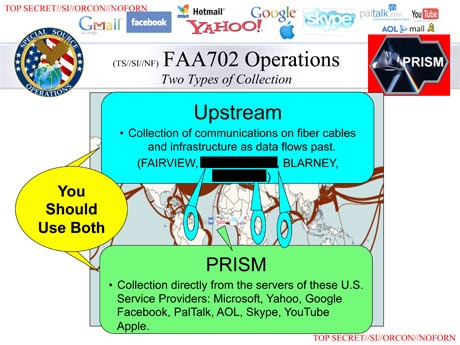

Here’s the larger question. PRISM is downstream collection, as the slide above makes clear, collection directly from a company’s servers. The problems addressed in the FISC opinion had to do with upstream collection.

We have always talked about upstream collection in terms of telecoms collecting data directly from switches.

But this all suggests that Google and Yahoo provide upstream data, as well.

I’ll have more to say about what this probably means in a follow-up. But for the moment, just consider that it suggests at least Google and Yahoo — both email providers — may be providing upstream data in addition to whatever downstream collection they turn over.

Update: See this post, in which I suggest that Google and Yahoo had problems not because of their own upstream collection (if either does any), but because certifications to them included targeting orders based on violated MCT collection that had to be purged out of the system.

Update: Softened verb in last sentence — perhaps they aren’t. But I suspect they are.

Update: Footnote 24 makes a pretty clear distinction between the upstream and PRISM collection.

In addition to its upstream collection, NSA acquires discrete Internet communications from Internet service providers such as [redacted] Aug. 16 Submission at 2; Aug. 30 Submission at 11; see also Sept. 7 2011 Hearing Tr. at 75-77. NSA refers to this non-upstream collection as its “PRISM collection.” Aug. 30 Submission at 11. The Court understands that NSA does not acquire “Internet transactions” through its PRISM collection. See Aug Submission at 1.

I think Booz Allen and the rest of them are required to LIE when asked about revenues and profits from the “intelligence” community or when reporting financial results. It’s written into the SEC law.

Ich bin nicht Kim Dotcom. Because if I was him, I would be a terrorist. Then I could be investigated by the New Zealand Secret Police. They seem to be hooked into PRISM. PRISM seems to be international. New Zealand is one of the Five Eyes so they get to share PRISM. And terrorist Dotcom had to be stopped with a SWAT raid, before he terrorized again.

A couple of points.

First, Google is an ISP, not only because of things like Google Fiber in Kansas City, and free wireless in various places, but because they bought a lot of backbone fiber after the 2001 dot com crash when it was cheap and built their own network. See: https://peering.google.com/about/index.html They like to carry their own traffic as far as possible on their own network for a whole lot of good reasons. So, they’re both upstream and downstream. (This is another example of where the messy technical reality doesn’t match any of the simple descriptions we’ve seen.)

Second, as for the money, if I were the corporate accountant charged with setting NSA rates, I’d start with something like: It costs us a billion dollars to move internet traffic, and they’re looking at 10% of it, so they owe us $100 million and negotiate down from there. It’s not as if the government can shame you for being a war profiteer without revealing these programs, so it’s almost your fiduciary responsibility to seek maximum compensation only loosely related to actual costs. Of course, turning these programs into a profit center changes the incentives for cooperation, so I’d love to know just who was paid and how much and how that was accounted for in the balance sheets.

When you wrote “certificates” rather than “certifications” in the headline, it gave me a scary thought, maybe a bit far-out: could the NSA be forcing the companies to break their own SSL certificate chain of custody somehow? I’m not saying this is particularly likely, but I’ll explore the idea for a minute.

If part of the order is for the government to get copies of or access to the private keys that the companies use to verify themselves to users of their sites during sessions, it might explain a few things. Fair warning: I’m really not an expert in this area at all.

If you have these keys and access to the routing of a user’s traffic, you can man-in-the-middle any or all secure sessions. This is similar to what Iran did when it compromised a certificate authority in the Netherlands. Iranian users typed in “gmail.com”. The government then diverted their traffic to its own machine. That machine told the users that they had reached Gmail, and the users’ browsers trusted this because there was a certificate that accompanied the message that came from this trusted Dutch authority verifying that this really is Google’s certificate. Next, the government machine simply passed the traffic on to the real Google and back again to the users, transparently. So the user sees a secure session to Google, and Google sees a secure session to the user, but the traffic is getting read in between.

Now, this trick can be detected by tools like Convergence that check whether people in different parts of the world are seeing the same certificate. But people will often click through a warning even if the certificate isn’t trusted. And if it is, you won’t get a warning, because browsers work off of a list of trusted authorities, not a network of checks and balances. Wired reported in 2010 on at least one company that was marketing forged-certificate man-in-the-middle boxes to federal law enforcement:

http://www.wired.com/threatlevel/2010/03/packet-forensics/

And if you have the *real* private key to the real certificate, I presume it becomes much easier still to fool the parties on either end of a session. Somebody who knows more could tell whether anything could look different.
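To make the Convergence-style check mentioned above a bit more concrete, here is a minimal Python sketch. It assumes you have already collected, from several vantage points, the DER-encoded certificate each one was shown for the same site; the function names and the vantage labels are made up for illustration and are not Convergence’s actual interface. Note the limitation described above: an interceptor presenting the genuine certificate would produce an identical fingerprint everywhere, so this comparison would not flag it.

```python
import hashlib
from collections import Counter
from typing import Dict


def fingerprint(der_bytes: bytes) -> str:
    """Return the SHA-256 fingerprint (hex) of a DER-encoded certificate."""
    return hashlib.sha256(der_bytes).hexdigest()


def compare_views(views: Dict[str, bytes]) -> dict:
    """Compare the certificate seen from each vantage point.

    `views` maps a (hypothetical) vantage-point name to the DER bytes
    observed there. In practice each observer could obtain them with
    ssl.get_server_certificate() followed by ssl.PEM_cert_to_DER_cert().
    """
    prints = {name: fingerprint(der) for name, der in views.items()}
    counts = Counter(prints.values())
    majority, _ = counts.most_common(1)[0]
    # Vantage points whose fingerprint differs from the most common one.
    outliers = sorted(name for name, fp in prints.items() if fp != majority)
    return {"consistent": len(counts) == 1, "outliers": outliers}
```

A disagreement only tells you that someone is seeing a different certificate; it does not say which view is the forged one, which is why this kind of check relies on having many independent observers.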

So what could this explain?

1. Compliance costs and time during program interrupt/restart. Serious certificate authorities and users guard private key data like it’s the Crown Jewels (except important):

http://arstechnica.com/security/2012/11/inside-symantecs-ssl-certificate-vault/

Depending on how the companies under order deal with their certificates, it could be difficult for them, especially if only a few people are allowed to know, to extract raw key information out of the system and hand it over. I checked the certificate info for a few sites by visiting. It looks like Gmail expires its certs out every 10 weeks-ish and replaces them. Yahoo Mail’s certificate was issued in March 2012 and is valid for two years. If the companies were on unshared keys during the hiatus, and upon program restart NSA had to wait for a Google rollover, and Yahoo had to arrange a visit to DigiCert’s vault in Lehi, Utah, that could explain the time lags.

As for monetary costs, I can imagine a few scenarios where un-breaking and then re-breaking certificate key custody could be expensive and difficult. It could involve changing physical boxes, making special arrangements for custom equipment with your certificate authority, ending them, and then re-making them, and maybe switching out routers with back doors. Big companies like Google keep caches of frequently-accessed data all over the place close to users.
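For anyone who wants to repeat the informal check of certificate validity periods described above, here is a rough Python sketch using only the standard library. The helper names are my own, and the hostname in the usage note is just an example; the dates a server returns today will of course differ from what was observed when this was written.

```python
import socket
import ssl


def validity_days(not_before: str, not_after: str) -> float:
    """Length of a certificate's validity window in days.

    Both arguments use the format returned by SSLSocket.getpeercert(),
    e.g. "Mar  1 00:00:00 2012 GMT".
    """
    start = ssl.cert_time_to_seconds(not_before)
    end = ssl.cert_time_to_seconds(not_after)
    return (end - start) / 86400


def fetch_validity(host: str, port: int = 443) -> float:
    """Connect to `host`, read its certificate, and return the validity window in days."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            cert = tls.getpeercert()
    return validity_days(cert["notBefore"], cert["notAfter"])
```

Calling something like `fetch_validity("mail.google.com")` needs network access. A two-year certificate should come out at roughly 730 days and a ten-week one at roughly 70.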

2. “Never heard of Prism.” Google etc. keep saying that they aren’t giving the government access to their databases. And Google swears that if you really know how many user accounts had an NSL on them, you’ll be very relieved. But the NSA documents imply that you can read pretty much any email from Fort Meade. How to explain the discrepancy? Since the server-to-server transmission of emails is being done by SSL (encrypted on the wire), we all assumed that the access must be in the downstream phase, and Google is just being least untruthful. But if the government has forced Google to let that SSL traffic be man-in-the-middled, NSA can then instantly store the email plaintexts and doesn’t have to issue an NSL unless they want to get something that is old and had already been purged on the NSA side to clear space. So Google can claim not to have provided any bulk user account data or server access and just be full of shit rather than be “lying”.

3. Lavabit. We assumed the NSA was demanding some kind of physical or root access to Levison’s machines to extract private keys and then search through his databases at will or via NSLs. Or maybe they wanted to force him to collect the keys out of his own RAM and keep them for later or turn them over. That sounds awfully complicated and expensive and/or depends quite a lot on active cooperation by an unwilling party. What if the order was simpler: hand over your private SSL cert key so that we can spoof you transparently to your users?

4. “No touching!” and encrypted communications: When the NSA says it isn’t “touching” traffic that is being filtered for its search terms and, failing to match any, not being dumped in a database, we should consider the implications. The natural conclusion is that telecoms run filtering switches and then divert matching traffic to an NSA box. And we’ve been reading recently about differences among telecoms in what to provide. But I’m not sure I remember seeing something definitive that said that the telecoms were actually running the hardware. The “touching” BS has stretched this far, so I don’t see why it wouldn’t stretch further to include an NSA man-in-the-middle box that reads SSL traffic rather than just plaintext.

5. Retention of encrypted domestic traffic. Clearly, it has always been bizarre that the minimization procedures would allow for the retention of encrypted traffic in a situation where it would by definition be illegal to retain the plaintext. And we’ve all been assuming that the traffic is retained because the NSA wants to read it if the day should come when they can actually brute force crack the cryptography.

But consider a scenario where the NSA is filtering traffic on a particular cable, including lots of SSL traffic for which they have their own copy of the SSL certificates from major providers. So there you are, collecting plaintext traffic and “incidentally” sweeping up domestic traffic, which minimization requires you to discard (unless it matches something foreign-related as it passes through? Then you can keep it. Which means there’s no real domestic protection, of course). Along comes a block of encrypted traffic that’s being passed between two domestic locations. You can’t search it for any terms without decrypting it, of course. So the “pre-touching” screen system doesn’t work – you want heavy petting, and you have your own certificate key to the service that the traffic is destined for.

So you want to divert this SSL traffic through a man-in-the-middle process, and you want to store it if it’s interesting (or you want to store it anyway because that’s what you love to do). But legally you’re screwed, since you know the communication is domestic, and the minimization procedures require you to destroy it “upon recognition”, with an affirmative standard for any further retention or processing (you have to “reasonably believe” that it contains foreign intelligence information or evidence of a crime, which you can’t do if it’s encrypted). Just maybe, the court might let you man-in-the-middle this traffic anyway, but maybe not: you can’t let the telecom control something as sensitive as these private certificate keys, so it has to be your box, and that means you’re searching domestic data content rather than just having the telecom search it and give it to you. And there might also be a “transmitting” problem if you’re creating a new stream of the encrypted message to send to the other side.

So here’s what you do. You establish an exception for encrypted data, and you tell everybody that it’s about cryptanalysis: after all you’re the original cryptanalytic agency, and you convince your meager and tech-challenged overseers that you need to be able to keep some raw encrypted data from wherever in order to get better at codebreaking. Then, in practice you use the exception to enable you to treat SSL traffic at least as intrusively, and perhaps even much more intrusively, than plaintext traffic. You intercept the traffic in your box and set to work telling the user that you’re Gmail and telling Gmail that you’re the user. Now you have two options. You can run search terms on the plaintext just like before and then “touch” the interesting stuff into your database.

OR you can do something even more wily that ends up giving SSL traffic less protection than plaintext. You just keep the whole original encrypted message, which isn’t subject to minimization, and you also keep in your database a copy of the cryptographic session information that you used for the secure connections to the user and the email service. (I’ve been using a user-transmitted-to-service email here, but of course it could be anything, including a page view of an email inbox or two email services talking to each other over SSL). Then, the way it becomes “useful in cryptanalysis” is that it gets unlocked and searched whenever an analyst runs a database query that might include it (it’s like leaving the keys on the driver’s mirror). This sounds very processing intensive, but (1) the NSA has a lot of cores and could spread the blocks of each communication around many of them to search in parallel, and (2) if the metadata (originally encrypted or not) is always fair game, then it’s really easy to index.

6. “Transactions” dispute. As Wittes and Bateman note ( http://www.lawfareblog.com/2013/08/the-nsa-documents-part-ii-the-october-2011-fisc-opinion/ ), one big concern of Bates’ was that communications that are not selectable were getting swept up with ones that were, and they were being stored together in single chunks. With downstream collection, this doesn’t make sense, since you would think you could search through each email individually. And emails on the wire that are being sent between services also come as single units, as far as I know.

So there are two possibilities. One of them is that Google and Yahoo and such were storing multiple emails together just because of the way their databases are formatted. So this really was about downstream collection, or some kind of artificial upstream collection where the services have to send the data to themselves so that it’s on the wire. And the compliance costs are best explained in this scenario: they came about when the court decided that the emails would actually have to be stored differently so that the NSA’s searches could pull targets with more precision rather than reading a bunch of blocks off a hard-drive.

The other is that the NSA was, as described above, breaking the SSL certificates and capturing the traffic of users as they navigated their inboxes in their web browsers. In that kind of scenario, it’s pretty easy to see how hard it would be to break the communications into discrete chunks that actually correspond to individual emails. People are clicking around and seeing related emails, forwards, etc. And since Google isn’t actually actively helping the process, it’s all on the NSA to reconstruct it into a rational order to define what could be targeted for future plaintext search, and they weren’t bothering to do so well. Is it possible that under a reformed process, there were compliance costs where the email providers were told to send page load information differently over the wire to make it easier?

7. In late 2012, Google began refusing to retrieve pop3 mail from third-party servers if those servers used a self-signed SSL certificate rather than one granted by a trusted certificate authority.

http://www.tomsguide.com/us/Gmail-SSL-POP3-Certificate-Self-Signed,news-16468.html

This is probably completely unrelated, but since this is a big speculative jaunt, I thought I’d throw it in there. Basically, if you have email with another service and want Gmail to bring it into a Gmail inbox for you, the third-party service can’t be your own anonymous server or a small provider that doesn’t pay for a trusted certificate and verify itself to a major authority. (Or you can do it, but Gmail won’t encrypt the exchange on the wire). For the sake of argument, say an adversary knew the NSA’s rules and wanted to play like this: get an email onto your own server, then securely transmit to Gmail, then e-mail some other Gmail user, so the mail would just sit in Google and maybe not go on the wire at all. There’s no way to man-in-the-middle this POP3 retrieval because the SSL certificate of the server is private, and it’s Gmail that is the “user”. Under the new policy, you can’t do this. If you want to get the email into Gmail’s system through retrieval from another box, it has to start out at a reputable email service provider or else go over the wire in plaintext.

There is a decent security explanation for this: Gmail wants to idiot-proof the service for you so that you can’t get man-in-the-middled by an adversary that has compromised your dinky server or email service. But still.

@Adam Colligan: Ah, I did fuck up the title.

But it was serendipitous, because that is exactly where I think this goes.

Here’s how the IC described this (you can listen along here):

Why would you use EMAIL as an example of MCTs? And given that Yahoo, at least, appears to be clearly one of the problem children, it does seem relevant.

So I’m not sure how they did it. But if you look at that PRISM slide, they say upstream counts as fiber cables AND infrastructure. We’ve always assumed it was the telecom’s switches, but maybe not.

And if Google was participating in some automated “upstream” collection it could say it wasn’t part of PRISM, even though NSA refers to it as such.

Isn’t upstream collection by Google (and I hope I’m following right here) pretty well implied by “MCTs”?

On the database side, like PRISM, individual messages can be selected for and filtered out.

But across the wire, we get the top of our inbox sent as a whole. MCTs.

Government use of the word “screenshot” is misleading, I think. It’s not pixels, like a screenshot. It’s a set of packets in a transaction, which gets rendered on our screen. They could, technically, parse out individual email information. But they don’t. And the “screenshot” language makes the parsing out task seem harder than it actually is.
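A toy Python model may help show the difference between keeping a whole transaction and parsing out the discrete messages inside it. Everything here is hypothetical: the `Message` fields, the idea that a selector is just an email address, and the two retention functions are my own illustration, not a description of any real NSA format or process.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Message:
    sender: str
    recipient: str
    subject: str


def matches(msg: Message, selectors: Set[str]) -> bool:
    """A message matches if either party is a tasked selector (an assumption)."""
    return msg.sender in selectors or msg.recipient in selectors


def retain_whole_transaction(transaction: List[Message], selectors: Set[str]) -> List[Message]:
    """MCT-style retention: one matching message pulls in the entire transaction."""
    if any(matches(m, selectors) for m in transaction):
        return list(transaction)
    return []


def retain_discrete(transaction: List[Message], selectors: Set[str]) -> List[Message]:
    """Parsed retention: keep only the individual messages that match."""
    return [m for m in transaction if matches(m, selectors)]
```

With an inbox view of three messages where only one involves a tasked address, the first function keeps all three and the second keeps one. That gap is the overcollection at issue in the “transactions” dispute.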

@Garrett: Say more.

Yes, if Google is involved in upstream, then it would be as you say. But couldn’t they get Google content at the telecom switch?

@emptywheel: Well, that statement makes sense in the context of any user who is browsing his or her inbox over plain old HTTP. So it’s possible for this entire debate, FISA case, etc to be had without mentioning any compromise of SSL or SSL certificates so long as people either don’t think about the difference between HTTP and HTTPS or just implicitly assume that the NSA is only talking about HTTP traffic and only gets at anything else using targeted NSLs downstream.

But note about webmail: Gmail has had https access turned on *by default* since January 2010 ( http://gmailblog.blogspot.com/2010/01/default-https-access-for-gmail.html ). How many people in the world could there be left who are browsing Gmail inboxes in plaintext? Hotmail started offering https in 2010 (not sure when/if it became default), and Yahoo started offering in 2013.

Unless the NSA had an as-yet-unpublished freakout about Gmail turning https on by default in 2010, it appears they didn’t care. So either 1. they don’t want to collect it all (ha!); 2. they’re doing bulk downstream collection and search (Google’s strong denials are very craftily worded); 3. they broke the SSL via some zero-day exploit (seems pretty hard even for them); 4. they’re compelling the cert keys and man-in-the-middling (the FISA court is even crazier than we thought); or 5. we’re missing something.

@Adam Colligan:

“And if you have the *real* private key to the real certificate, I presume it becomes much easier still to fool the parties on either end of a session. Somebody who knows more could tell whether anything could look different.” Kim Dotcom mentioned that his game ping times rose dramatically when he was being watched (had to take a more circuitous route past an interception device). Anyway, in the case of a large site, SSL key material is generally treated seriously so a forged cert will *probably* be for a different key than was originally signed, and the key fingerprint will change whenever the key changes. Many smaller site operators treat their SSL key material with a bit less care just so they don’t have to wake up at 3am and type passphrases everywhere when a server doesn’t come up, so it’s possible that their original key and/or signing request could have been stolen.

4. “No touching”: as suggested by the PRISM collection flowchart, the providers connect to FBI standard intercept equipment already installed at selected hot spots so FBI can grab what they want and keep their taskings secret. NSA tasks and receives data from FBI, not the provider, et voilà, “no direct access”. See http://www.washingtonpost.com/wp-srv/special/politics/prism-collection-documents/images/prism-slide-7.jpg

6. “And emails on the wire that are being sent between services also come as single units, as far as I know.” Sort of. Most modern SMTP servers will attempt to bundle and send all mail destined for a particular mail exchanger in a single SMTP/TCP session, but each distinct message is a distinct DATA block within the session. The whole session may be encrypted (e.g. STARTTLS), effectively sealing the bundle together as far as a packet capture can see.
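As a small illustration of the point about distinct DATA blocks, here is a Python sketch that splits the client side of a plaintext SMTP session into individual messages. The function name and the list-of-lines input are assumptions for the example. It only works on an unencrypted transcript; once STARTTLS is in play, a packet capture sees none of these boundaries.

```python
from typing import List, Optional


def split_smtp_messages(client_lines: List[str]) -> List[str]:
    """Split the client side of one plaintext SMTP session into messages.

    `client_lines` holds the lines sent by the client, without CRLF.
    Each message starts after a DATA command and ends at a line
    containing only ".".
    """
    messages: List[str] = []
    body: Optional[List[str]] = None  # None means we are in the command phase
    for line in client_lines:
        if body is None:
            if line.strip().upper() == "DATA":
                body = []
        elif line == ".":
            # End-of-data marker: close out this message.
            messages.append("\n".join(body))
            body = None
        else:
            # Undo dot-stuffing: a leading "." was doubled by the sender.
            body.append(line[1:] if line.startswith(".") else line)
    return messages
```

Fed a session in which the client issues DATA twice, this returns two separate message bodies, even though both travelled over a single TCP connection.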

@emptywheel:

No, you’re right. At the telecom switch, or just upstream of Google, would be the same.

Some cell phone traffic could be MCTs as well. When you come on line, you’d get a communication to your phone, that you have three new voicemail messages and two new text messages or such. Depending on how they implement it, but I’d suspect they send the new information as one transaction.

How does VOIP figure in here?

@emptywheel: Wikipedia’s entry on VOIP security really needs to be updated, but this part may answer your question:

“Many consumer VoIP solutions do not support encryption of the signaling path or the media, however securing a VoIP phone is conceptually easier to implement than on traditional telephone circuits. A result of the lack of encryption is a relative easy to eavesdrop on VoIP calls when access to the data network is possible.[36] Free open-source solutions, such as Wireshark, facilitate capturing VoIP conversations.”

http://en.wikipedia.org/wiki/VoIP

just as a side note, when Microsoft bought out Skype, it was rumored they put in backdoors that act similar to the pen registers present on the hardline telephone systems.

@Jonathan:

Shamir was overtly talking about cyber-crime (I think) at the recent RSA Conference:

http://threatpost.com/rsa-conference-2013-experts-say-its-time-prepare-post-crypto-world-022613/77565

““It’s very hard to use cryptography effectively if you assume an APT is watching everything on a system,” Shamir said. “We need to think about security in a post-cryptography world.”

One way to help shore up defenses would be to improve–or replace–the existing certificate authority infrastructure, the panelists said. The recent spate of attacks on CAs such as Comodo, DigiNotar and others has shown the inherent weaknesses in that system and there needs to be some serious work done on what can be done to fix it, they said.

“We need a PKI where people can specify who they want to trust, and we don’t have that,” said Rivest, another of the co-authors of the RSA algorithm. “We really need a PKI that not only is flexible in the sense that the relying party specifies what they trust but also in the sense of being able to tolerate failures, or perhaps government-mandated failures. We still have a very fragile and pollyanna-ish approach to PKI. We need to have a more robust outlook on that.””

APT = Advanced Persistent Threat

But that could also be one of your own government agencies.

So maybe he and his fellow panelists were talking around the elephant in the room.

I think “Can some element of the US government compel the production of the master keys for a major telecommunications company?” is a fascinating question.

jesus – this is like watching a horror movie.

there really can be no solution to the nsa “constitutional problem” except complete dissolution of the nsa into separate parts.

what i am beginning to glean from all this reporting and commentary, is that there is no government authority in charge of this spy-and-lie government agency, the nsa.

there is no president, no congressional committee, no court public or secret, no national security council, no director of national intelligence that has the knowledge to control this organization. in fact, i think it is reasonable to assert that this social and electro-mechanical spying machine is the leading edge of a problem humans will have to deal with for decades into the future – technological capacity that is very sophisticated but poorly understood by its authorized govt overseers.

i doubt sen feinstein or congr rogers or most of their committees understand even a fraction of the technology and its implications. this goes as well for prez, vice-prez, district judges, appellate judges and supreme court judges.

we are in a new age of the technologically feasible, but poorly understood technological monster.

there’s another dimension to this massive syphoning of communications data by the u.s. national security agency.

nature vol 497 2 may 2013:

dirk helbing

“globally networked risks and how to respond”.

pp. 51-59.

@Saul Tannenbaum: Isn’t that the equivalent (understanding that it doesn’t have master keys) of what we think it asked Lavabit to do?

@emptywheel: I think that’s the core of the question. Production of passwords and keys can be compelled by subpoena, but that’s generally been understood to be in the context of, say, the password to decrypt an individual’s hard disk. They can make you, for example, open a safe and “opening” a disk is considered the same thing. So, if we were exchanging encrypted email, we could be compelled to surrender our keys so the conversation, after the fact, can be decrypted.

When it comes to Lavabit, I’m a bit puzzled by why it’s considered so secure. The best outline of the service I can find (http://highscalability.com/blog/2013/8/13/in-memoriam-lavabit-architecture-creating-a-scalable-email-s.html) leads one to think that, over the wire, it’s no more or less secure than Gmail or Yahoo, and that its reputation for security comes from its encryption of mail messages on disk. Lavabit retains your private key, password protected, which it requires to encrypt/decrypt messages. Compelling Lavabit to turn over the messages and the private key is certainly within the realm of settled law. Breaking the password protection of the private key is in the wheelhouse of the NSA. (The security design of Lavabit strikes me as naive, and Levison’s reaction sounds to me like a guy who just realized that.)

For Google’s master keys, let’s shift analogies. The government can compel a bank to open a safe deposit box. But can it compel the bank to turn over a (hypothetical) master key that would open all the safe deposit boxes and be allowed in to do whatever it wants, subject to minimization procedures?

I don’t know the answer, but it’s qualitatively different than what’s required to read mail from Lavabit, and I wouldn’t trust the FISA court to say no. Up until the last few days, I would have considered anyone asking this question to be ready for a tin foil hat. Now I think it’s a question worth asking. I can’t imagine getting an answer. If our government has these keys, that’s as closely held a secret as, say, the nuclear launch codes. Or, at least, I would hope it is.