The Future of Work Part 2: The View From the White House

In December 2016, top advisors in the Obama Administration published a report titled Artificial Intelligence, Automation, and the Economy, which I will call the AI Paper. It combines the views of the Council of Economic Advisers, the Domestic Policy Council, the Office of Science and Technology Policy, the National Economic Council, and the US Chief Technology Officer into a single report. A brief Executive Summary gives a decent overview of the substance of the report, followed by a section on the economics of artificial intelligence technology and a set of policy recommendations. It's about what you'd expect from a committee: weak wording and plenty of caveats. But there are nuggets worth thinking about.

First, it would be nice to have a definition of artificial intelligence. There isn't one in this report, but it references an earlier report, Preparing for the Future of Artificial Intelligence, which dances around the issue for several paragraphs. Most of the definitions there are operational: they describe the way a particular type of AI might work. But these types all differ, just as neural network machine learning differs from rules-based expert systems. So we wind up with this:

This diversity of AI problems and solutions, and the foundation of AI in human evaluation of the performance and accuracy of algorithms, makes it difficult to clearly define a bright-line distinction between what constitutes AI and what does not. For example, many techniques used to analyze large volumes of data were developed by AI researchers and are now identified as “Big Data” algorithms and systems. In some cases, opinion may shift, meaning that a problem is considered as requiring AI before it has been solved, but once a solution is well known it is considered routine data processing. Although the boundaries of AI can be uncertain and have tended to shift over time, what is important is that a core objective of AI research and applications over the years has been to automate or replicate intelligent behavior. P. 7.

That's circular, of course. For the moment, let's use an example instead of a definition: machine translation from one language to another, as described in this New York Times Magazine article. The article sets up the problem of translation and describes how neural network machine learning improved on previous rule-based solutions. For more on neural network theory, see this online version of Deep Learning by Ian Goodfellow, Yoshua Bengio and Aaron Courville. H/T Zach. Its introduction may be more helpful than the NYT magazine article for understanding the basics of the technology. It explains the origin of the term “neural network” and why it was replaced by the term “deep learning”. It also puts meat on the skeletal metaphor of layers used in the NYT magazine article.

The first section of the AI Paper takes up the economic impact of artificial intelligence. Generally it argues that to the extent AI improves productivity, it will have positive effects, because it decreases the human labor input needed for the same or higher levels of output. This kind of statement treats labor as what Karl Polanyi calls a fictitious commodity. The AI Paper tells us that productivity growth has slowed over the last decade. That's because, they say, there has been a slowdown in capital investment and a slowdown in technological change. The writers apparently treat these as unconnected, but of course they are connected in several indirect ways. The writers argue that improvements in AI might help increase productivity, and thus enable workers to “negotiate for the benefits of their increased productivity, as discussed below.” P. 10.

The AI Paper then turns to the history of technological change, beginning with the Industrial Revolution. We learn that it was good on average, but lousy for many who lost jobs. It was also lousy for those killed or maimed working at the new jobs, and for those marginalized, wounded and killed by government and private armies for daring to demand fair treatment. These are presumably categorized as “market adjustments”, which, according to the AI Paper, “can prove difficult to navigate for many.” P. 12. Recent economic papers show that wages for those affected by these market adjustments never recover, and we can blame the workers for that: “These results suggest that for many displaced workers there appears to be a deterioration in their ability either to match their current skills to, or retrain for, new, in-demand jobs.” Id.

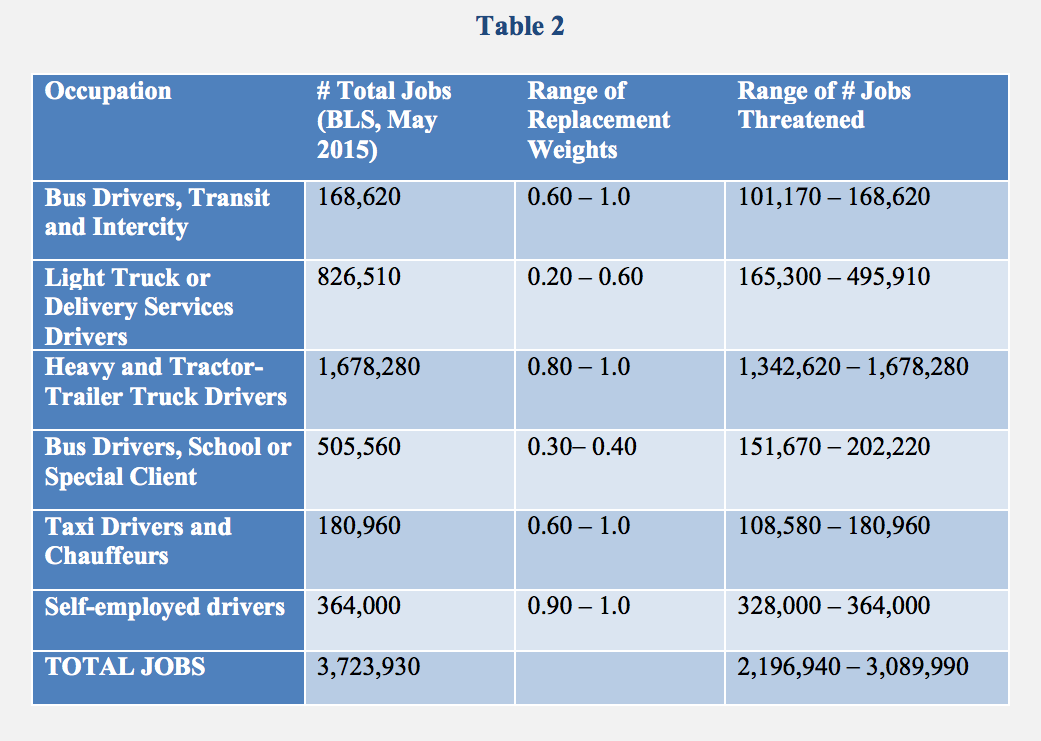

The AI Paper then takes up some of the possible results of improvements in AI technology. Job losses among the poorest paid employees are likely to be high, and wages for those still employed will be kept low by high unemployment. Jobs requiring less education are likely to be lost, while those requiring more education are likely safer, though certainly not absolutely safe. The main example is self-driving vehicles. Here’s their chart showing the potential for driving jobs that might be lost.

That doesn't include any knock-on job losses, such as reduced hiring of dispatchers or at roadside restaurants.

It also doesn't include the new jobs that AI might create. These are described on pp. 18-19. Some are in AI itself, though as the NYT magazine article shows, it doesn't seem like there will be many. Some new jobs will be created because AI increases the productivity of other workers. Some are in new fields related to handling AI and robots. Those don't sound like jobs for high school grads. Most of the jobs have to do with replacing infrastructure to make AI work. Here's Dave Dayen's description of the need to rebuild all streets and highways so autonomous vehicles can work. Maybe all those displaced 45-year-old truck drivers can get jobs painting stripes on the new roads. There are no numerical estimates of these new jobs.

The bad news is buried in Box 2, p. 20. Unless there are major policy changes, most of the wealth is likely to go to the rich. And then there's this:

In theory, AI-driven automation might involve more than temporary disruptions in labor markets and drastically reduce the need for workers. If there is no need for extensive human labor in the production process, society as a whole may need to find an alternative approach to resource allocation other than compensation for labor, requiring a fundamental shift in the way economies are organized.

That certainly opens a new range of issues.

Update: the link to the AI Paper has been updated.

Many unknowns await the deployment of AI-based products and services that are beginning to see use in daily life. Facial recognition, voice recognition, and advanced real-time perceptual reduction and response all seem routine these days. Cars drive themselves, for crying out loud. This is new.

There are always issues with new tech, and AI holds exceptional potential because of the power of inference processing. Like any new and powerful technology, it can be used for good or ill.

Enhanced analytical procedures using machine learning (ML) and related technologies underlie applications offering “natural” user interfaces. Simplifying the experience of using a computer system brings significant benefits to low-information users, but also possible unintended consequences. If the computer program can figure out what you want, much of the workload shifts into the vendor's domain. At first blush, that sounds like an advantage for the user.

There are several dramatic implications of this developing trend. First, AI requires sufficient data points to make reasonable inferences. This leads to more and more data residing in the hands of unknowable authorities. Do people feel comfortable with this level of asymmetric knowledge and power? Trust is key.

As in all authority models, trustworthiness, or lack thereof, is the real issue.

Human consciousness represents a reasoning model in which decisions are drawn from layers of knowledge, ostensibly collected over a lifetime. Conclusions reached by human thought stem from a labyrinth of perceptions, biases, notions, imperatives, rationalizations and impulses. Who gives us this capacity to observe and act on such varied stimuli? Well, I'm going out on a limb here, but it seems to have been a feature of biological structures whose continuity was self-reinforced by the integration of quantum-entanglement-enabled cellular communications. By comparison, that makes us trillion-CPU AI bots.

Once upon a time, computer programs were deterministic: they simply ran a series of commands that produced an expected result. AI, by contrast, is not so linear that it simply thinks what it is told. ML applications think in terms of “what fits” and what could be. Who knew that such programs could hallucinate with clarity?

What are the long-term implications of machines that behave in contextual paradigms? Well, humans are likely to lose their hegemony over so-called intelligent thought. Computers can see patterns that untrained eyes cannot. Hence the emergence of a white hat/black hat dichotomy. Unfortunately, the hat color is in the eye of the beholder.

The bottom line for the moment is that machines do the bidding of their masters, so there shouldn't be an outbreak of rogue, self-generated autonomous terminators. Any that appear would be directed by a so-called authority.

It could prove challenging to outsmart the new tech with anything but newer tech, same as always. The problem is that in this context, we humans may become the old tech. Should we ban AI? Ennh, I say learn how and why it works. Do the research. Read about recurrent neural networks. Learn about adaptive-node finite element analysis. Learn how humans really think instead of just skimming ancient texts about idealized cognition.

We don’t have a clue how human intelligence functions quantitatively. Smarter machines may reveal this mystery as we embrace the tools to emulate and synthesize consciousness in inanimate objects. Someone may be tempted to confuse this with immortality. It’s not. It is just a clever new way to use electrons.

The universe as we know it is simply a home for electrons. We mightily benefit from their existence. Let's use this technology to visit new worlds, both inside and outside the boundaries of physical limitation. The alternative is not compelling.

Perhaps if we want to define “artificial intelligence”, we should start with a definition of “intelligence” per se. One online dictionary says this: “the ability to learn or understand or to deal with new or trying situations : reason; also : the skilled use of reason (2) : the ability to apply knowledge to manipulate one's environment or to think abstractly as measured by objective criteria (as tests)”.

That seems to refer to human activity. So “artificial intelligence” would simply be the same things done by a machine, no? If so, are the following examples of AI? A lawn sprinkler system that collects rain data and turns itself on only when needed. A thermostat that senses temperature and turns the furnace or A/C on when needed. A car that senses which key is inserted (Driver 1 or Driver 2) and adjusts itself accordingly. HAL in “2001: A Space Odyssey”? Etc.

I do believe that these are all “intelligent” things as defined above. But I sense that they are not the “AI” that all these authors are discussing, because these things don't really take away existing jobs but rather add new fillips to our lives. My takeaway is that “AI” as discussed these days is any use of machine intelligence that takes away jobs. (For another day: the discussion of AI that is to be feared because it controls our lives.)

Ed, many thanks.

I’m sending Gov. Walker some high level policy ideas, copied to reporters and editors at the Milwaukee Journal Sentinel. Your links and insights will figure prominently.