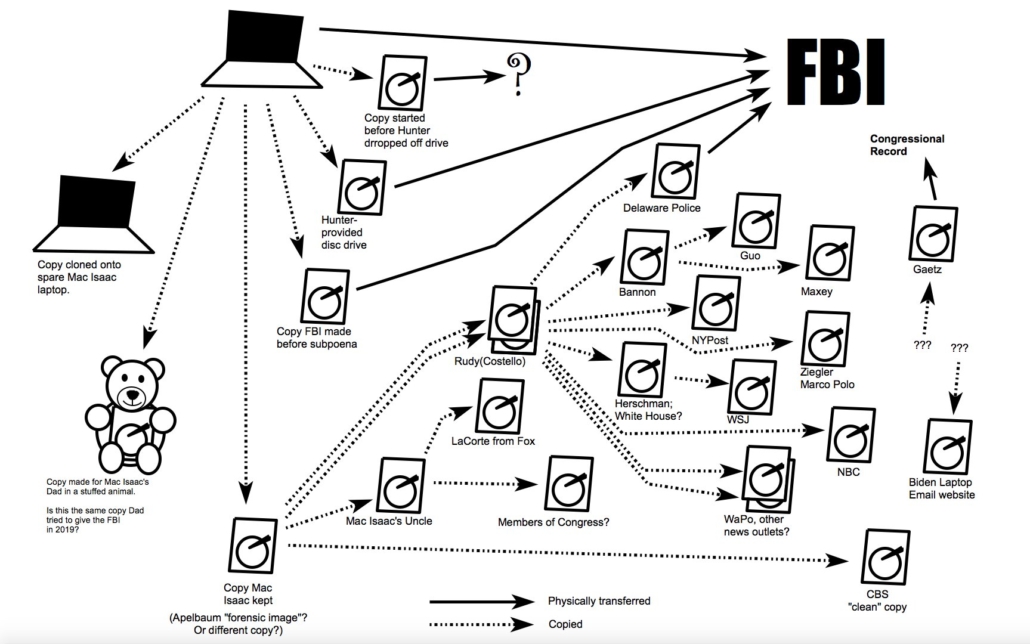

I’ve been wading through Hunter Biden data all weekend. There’s some evidence that descriptions of the “Hunter Biden” “laptop” based on the drive Rudy Giuliani has peddled do not match what should be on such devices, given what the FBI and IRS saw.

Before I explain that, though, I want to talk about how the life of Hunter Biden’s iCloud account differs from what is portrayed in this analysis paid for by the Washington Examiner.

As that report describes, Hunter Biden activated a MacBook Pro on October 21, 2018, then set it up with Hunter’s iCloud on October 22. Hunter then used the MacBook as his primary device until March 17, 2019, a month before it waltzed into John Paul Mac Isaac’s computer repair shop to start a second act as the biggest political hit job ever.

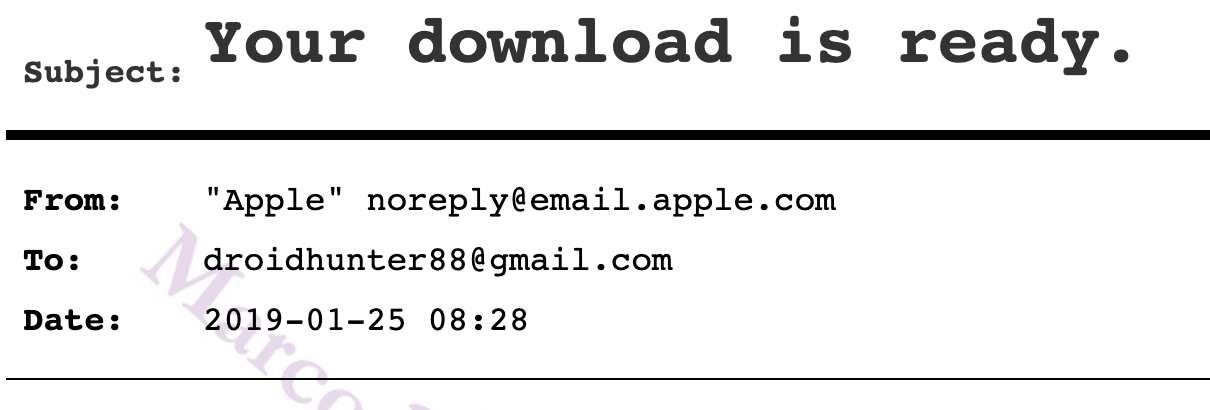

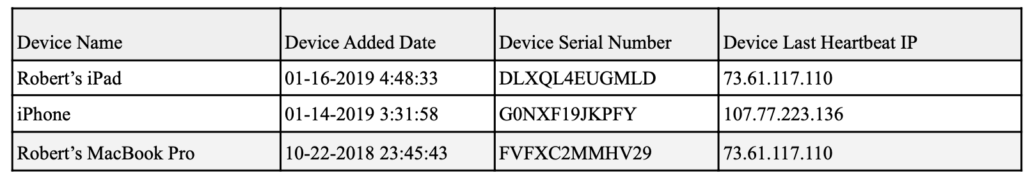

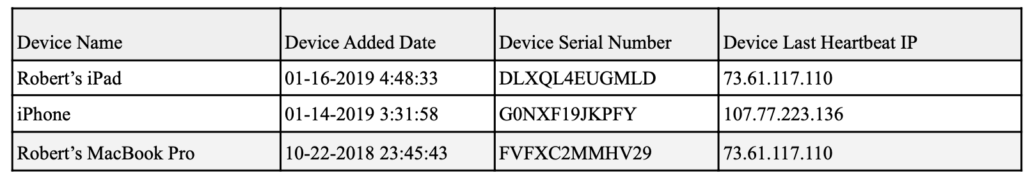

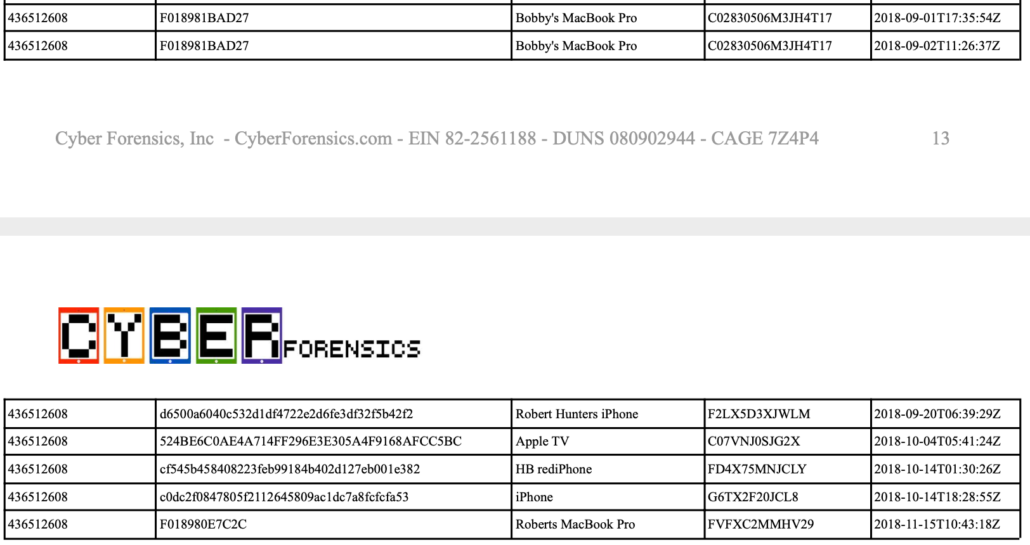

There are problems with that story. A longer table of the devices that logged into Hunter Biden’s iCloud includes devices that appear to have been accessing core Hunter Biden content.

That same table doesn’t show any access after November 15, 2018. The last access is by the device named Robert’s MacBook Pro, the one that would end up in a Delaware repair shop, but it shows up six days earlier than it should. There’s also a phone that should show up among those devices but does not.

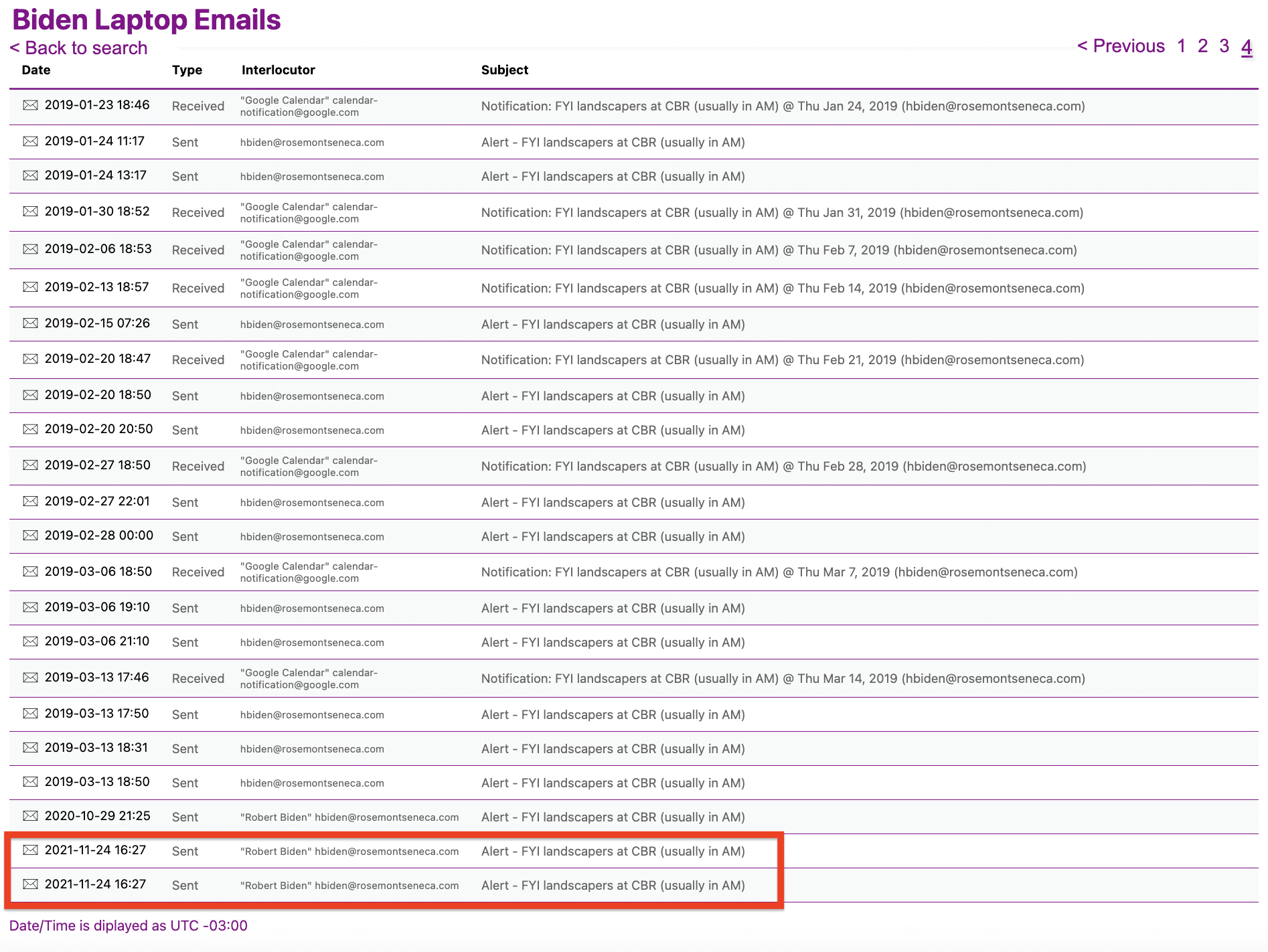

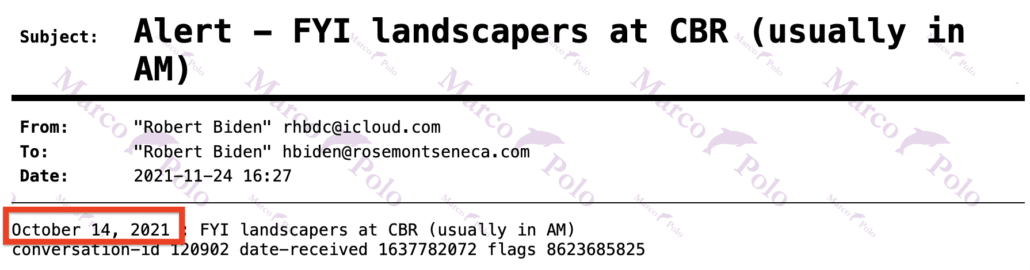

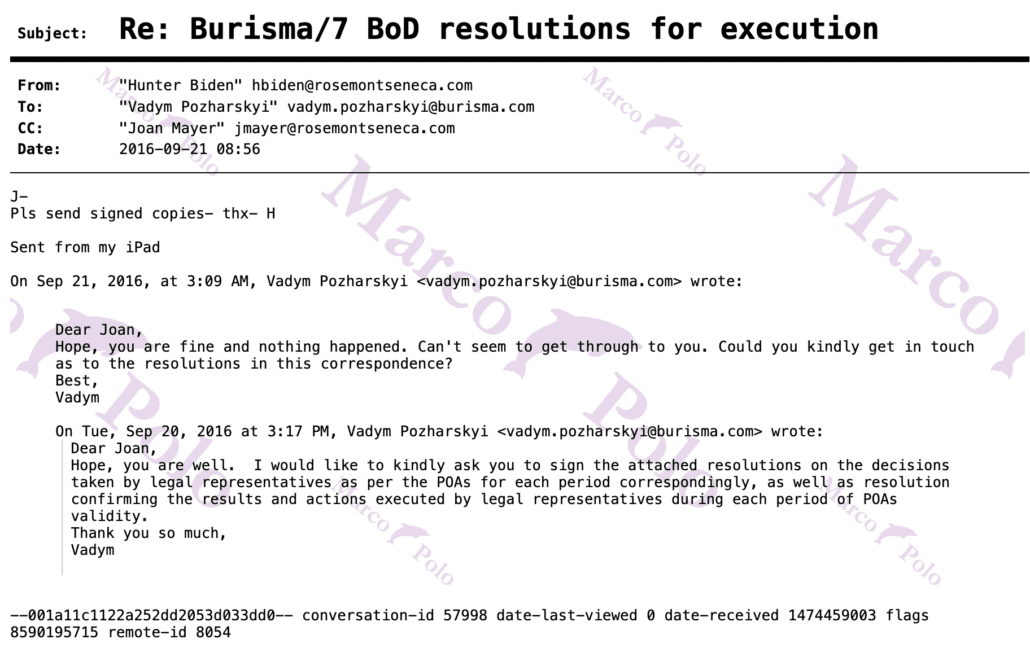

The report doesn’t discuss the import of the shifts between these emails.

RHB used several emails for business and personal use including:

○ [email protected] [sic]

○ [email protected] ([email protected])

○ [email protected]

○ [email protected]

○ [email protected]

One email missing from this list is a Gmail account under which a bunch of passwords were stored. That’ll become important later.

The most important email is the Gmail account (misspelled above), [email protected], which Hunter Biden used to contact sex workers, probably including the Russian escort service that the IRS used to predicate its investigation. That email account got added to his iCloud account at the same time as his iCloud contents were requested, and then again before the MacBook stopped being used. Those changes often happened in conjunction with changes to the phone number.

For now, though, I just want to map out the major events with Hunter’s iCloud accounts from September 1, 2018 (perhaps in the months before the IRS would open an investigation into him because he was frequenting a Russian escort service) until the final email as found on the laptop itself. There’s a bunch more: one credit card after another gets rejected, and he keeps moving his Wells Fargo card over to pay for his Apple account; the iCloud account shows Hunter reauthorizing use of biometrics to get into his Wells Fargo account in this period.

In January 2019, the Gmail account Hunter Biden used to contact sex workers (probably including the Russian escort service he had been using) effectively took over his iCloud account and asked for a complete copy of its contents. Then, the next month, all the data on the Hunter Biden laptop was deleted.

Update: I’ve taken the reference to the HB RediPhone out altogether–it’s clear that’s a branded iPhone–and replaced it with a better explanation of the other devices.

Update: I see that he does have D[r]oidhunter88, but doesn’t discuss the import of it.

Update: I’ve added a few things that happened while Hunter’s account was pwned. Importantly, as part of this process an app called “Hunter” was given full access to his droidhunter88 gmail account. There are also a few emails that seem to be a test process.

Update: Added the missing Gmail account.

Hunter Biden’s iCloud

9/1/18: An account recovery request for your Apple ID ([email protected]) was made from the web near Los Angeles, CA on August 31, 2018 at 9:36:07 PM PDT. The contact phone number provided was [Hunter Biden’s].

9/1/18: The following changes to your Apple ID, [email protected] were made on September 1, 2018 at 10:29:36 AM PDT: Password

9/1/18: Your Apple ID ([email protected]) was used to sign in to iCloud on a MacBook Pro 13″. Date and Time: September 1, 2018, 10:34 AM PDT

9/1/18: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: September 1, 2018, 10:42 AM PDT

9/2/18: Your Apple ID, [email protected], was just used to download Hide2Vault from the Mac App Store on a computer or device that has not previously been used.

9/2/18: Welcome to your new MacBook Pro with Touch Bar.

9/11/18: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser.

9/11/18: The password for your Apple ID ([email protected]) has been successfully reset.

9/11/18: Robert’s iPad is being erased. The erase of Robert’s iPad started at 2:56 PM PDT on August 5, 2018.

This is one of several times in several weeks that Hunter loses his iPhone, but while it’s lost, someone also pings his MacBook.

9/16/18: A sound was played on iPhone. A sound was played on iPhone at 8:25 PM PDT on September 15, 2018. (Repeats 25 times in 5 minutes)

9/16/18: A sound was played on Robert’s MacBook Pro at 8:30 PM PDT on September 15, 2018. (Repeats 2 times)

9/16/18: A sound was played on iPhone at 8:31 PM PDT on September 15, 2018. (Repeats 7 times)

9/16/18: iPhone was found near Santa Monica Mountains National Recreation Area 23287 Palm Canyon Ln Malibu, CA 90265 United States at 11:32 PM PDT.

9/16/18: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser.

9/19/18: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser.

9/20/18: Your Apple ID ([email protected]) was used to sign in to iCloud on an iPhone 8 Plus.

This is the second time he loses his phone. What follows is basically a chase of Hunter Biden’s iPhone across LA. It’s not clear it is ever recovered — but it is over two weeks before a new iPhone logs into his account.

9/27/18: Lost Mode enabled on Robert Hunter’s iPhone. This device was put into Lost Mode at 7:20 PM PDT on September 27, 2018.

9/27/18: Robert Hunter’s iPhone was found near [address redacted] Lynwood, CA 90262 United States at 7:20 PM PDT.

9/27/18: Your Apple ID ([email protected]) was used to sign in to iCloud on an iPhone 8 Plus.

9/27/18: A sound was played on Robert Hunter’s iPhone at 7:20 PM PDT on September 27, 2018.

9/27/18: A sound was played on Robert Hunter’s iPhone at 7:20 PM PDT on September 27, 2018.

9/27/18: Robert Hunter’s iPhone was found near [address redacted] Lynwood, CA 90262 United States at 7:20 PM PDT.

9/28/18: Robert Hunter’s iPhone was found near [different address redacted] Lynwood, CA 90262 United States at 4:24 PM PDT.

9/28/18: Robert Hunter’s iPhone was found near [third address redacted] Lynwood, CA 90262 United States at 5:27 PM PDT.

9/28/18: Robert Hunter’s iPhone was found near [fourth address redacted] Los Angeles, CA 90036 United States at 6:22 PM PDT.

9/28/18: Robert Hunter’s iPhone was found near [fifth address redacted] Los Angeles, CA 90069 United States at 6:38 PM PDT.

10/13/18: Bobby Hernandez to [email protected]: You left your phone. How do I get it to you?

10/14/18: The password for your Apple ID ([email protected]) has been successfully reset.

By date, this login is the HB rediPhone, but Apple recognized it as an iPhone X.

10/14/18: Your Apple ID ([email protected]) was used to sign in to iCloud on an iPhone X. Date and Time: October 14, 2018, 11:24 AM PDT

10/17/18: The password for your Apple ID ([email protected]) has been successfully reset.

10/17/18: The following information for your Apple ID (r•••••@rspdc.com) was updated on October 17, 2018. Trusted Phone Number Added – Phone number ending in 73

10/17/18: New sign-in to your linked account [email protected] Your Google Account was just signed in to from a new Apple iPhone device.

Per the Gus Dimitrelos report, the following activity reflects the creation of a new MacBook account called Robert’s MacBook Pro, the laptop that would end up in Mac Isaac’s shop. But there doesn’t appear to be an alert for a new device like there is for the iPhone 8 Plus the following day.

10/21/18: Your Apple ID ([email protected]) was used to sign in to iCloud on a MacBook Pro 13″. Date and Time: October 21, 2018, 5:50 AM PDT

10/21/18: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: October 21, 2018, 9:06 AM PDT

10/22/18: The following changes to your Apple ID, [email protected] were made on October 22, 2018 at 7:47:30 PM EDT: Phone number(s)

10/23/18: Your Apple ID, [email protected], was just used to download Quora from the App Store on a computer or device that has not previously been used.

10/23/18: Your Apple ID ([email protected]) was used to sign in to iCloud on an iPhone 8 Plus. Date and Time: October 23, 2018, 4:10 PM PDT

10/23/18: New sign-in to your linked account [email protected] Your Google Account was just signed in to from a new Apple iPhone device.

Several spyware apps get purchased in this period.

10/29/18: Your mSpy credentials to your control panel: Username/Login: [email protected]

11/2/18: Your Apple ID ([email protected]) was used to sign in to iCloud on an iPhone XS.

11/16/18: You recently added [email protected] as a new alternate email address for your Apple ID.

11/21/18: You’ve purchased the following subscription with a 1‑month free trial: Subscription Tile Premium

11/22/18: Your Apple ID, [email protected], was just used to download KAYAK Flights, Hotels & Cars from the iTunes Store on a computer or device that has not previously been used.

12/28/18: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: December 28, 2018, 7:06 AM PST

1/3/19: Keith Ablow (then Hunter’s therapist) says Hunter’s email is screwed up

1/6/19: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: January 6, 2019, 1:51 AM PST

1/12/19: Your Recent Mac Cleanup Pro Order [ADV181229-7742-90963]

1/14/19: The following changes to your Apple ID, [email protected] were made on January 13, 2019 at 10:28:31 PM EST: Phone number(s)

1/14/19: The following changes to your Apple ID, [email protected] were made on January 13, 2019 at 10:31:15 PM EST: Password

1/14/19: The following changes to your Apple ID, [email protected] were made on January 13, 2019 at 10:52:13 PM EST: Billing and/or Shipping Information

1/14/19: The following changes to your Apple ID, [email protected] were made on January 13, 2019 at 10:53:40 PM EST: Phone number(s)

1/14/19: The following changes to your Apple ID, [email protected] were made on January 13, 2019 at 11:12:45 PM EST: Billing Information

1/16/19: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: January 16, 2019, 1:59 PM PST

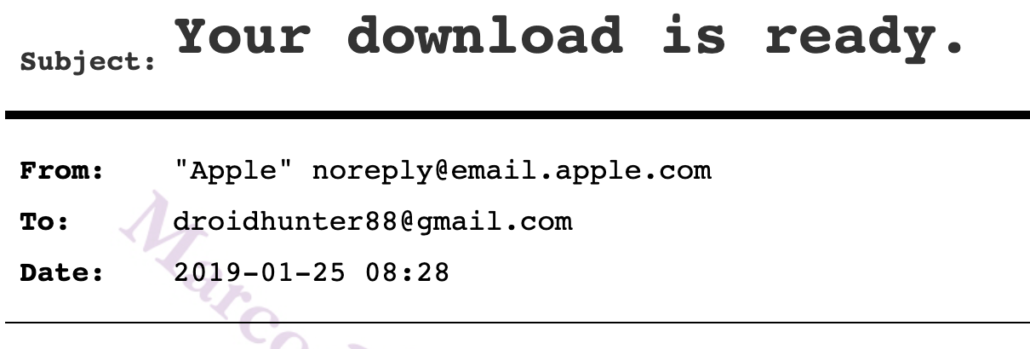

While Hunter is in ketamine treatment at Keith Ablow’s, a service called “Hunter” gets access to the droidhunter88 Gmail account.

1/16/19: Here’s my first tip for you!

1/16/19: Hi Robinson, Hunter now has access to your Google Account [email protected].

Hunter can:

View your email messages and settings

Manage drafts and send emails

Send email on your behalf

A bunch of things happen in this four-day period. First, someone accesses droidhunter88 from a new iPhone. Someone changes the phone number for the Hunter Biden iCloud. Then droidhunter88 is given access to the iCloud account. Then the iCloud account orders all of Hunter’s iCloud contents. Then the password for the account is reset.

1/17/19: New device signed in to [email protected] Your Google Account was just signed in to from a new Apple iPhone device.

1/17/19: I am here to help you find the emails you need!

Giovanni here from Hunter.

I wanted to quickly check if I can help you getting started with Hunter.

There are plenty of functionalities included with your free plan that will allow you to find, verify and enrich a set of data in bulk: these are all explained in our video guides.

However, if you already have a precise task to perform, reply to this email so I can better assist you!

1/17/19: n (from [email protected])

1/18/19: Long email to tabloid journalist sent under rosemontseneca email (this is sent first to Keith Ablow and then George Mesires, the latter of whom responds); this would have shown how the email account worked

1/19/19: The following information for your Apple ID (r•••••@rspdc.com) was updated on January 19, 2019. Trusted Phone Number Removed – Phone number ending in 13

1/20/19: The following changes to your Apple ID, [email protected] were made on January 20, 2019 at 5:24:54 PM EST: Phone number(s)

1/20/19: The following changes to your Apple ID, [email protected] were made on January 20, 2019 at 5:31:21 PM EST: Apple ID

Email address(es)

1/20/19: The following changes to your Apple ID, [email protected] were made on January 20, 2019 at 5:31:21 PM EST: Apple ID Email address(es)

1/20/19: A request for a copy of the data associated with the Apple ID [email protected] was made on January 20, 2019 at 5:40:26 PM EST

1/21/19: The password for your Apple ID ([email protected]) has been successfully reset.

1/21/19: The following changes to your Apple ID, [email protected] were made on January 21, 2019 at 8:28:05 AM EST: Name — changed from Robert Hunter to Robert Biden

1/21/19: You recently added [email protected] as the notification email address for your Apple ID

1/21/19: The following changes to your Apple ID, [email protected] were made on January 21, 2019 at 8:31:02 AM EST: Rescue email address

1/22/19: The following information for your Apple ID (r•••••@icloud.com) was updated on January 22, 2019. Trusted Phone Number Removed – Phone number ending in 96

1/22/19: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: January 22, 2019, 4:21 AM PST

1/22/19: The following changes to your Apple ID, [email protected] were made on January 22, 2019 at 10:05:20 AM EST: Email address(es)

1/22/19: The following changes to your Apple ID, [email protected] were made on January 22, 2019 at 10:05:29 AM EST: Email address(es)

1/22/19: The following changes to your Apple ID, [email protected] were made on January 22, 2019 at 10:05:34 AM EST: Email address(es)

1/24/19: You recently added [email protected] as a new alternate email address for your Apple ID.

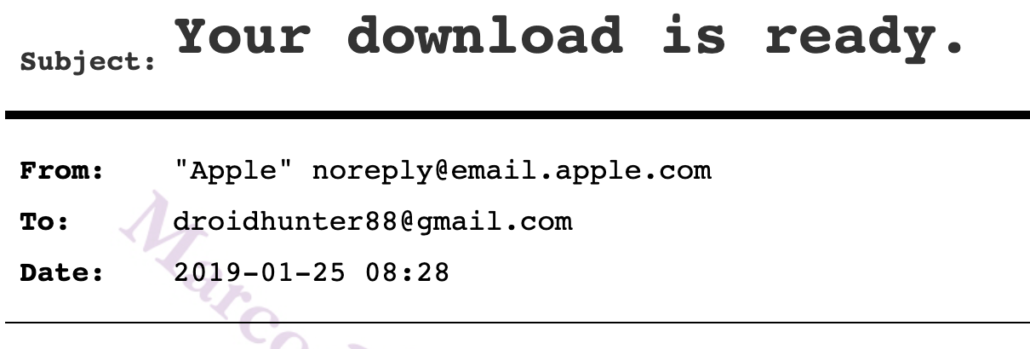

I think that after ordering all Hunter’s data, the account is reset to what it had been from the start. But Droidhunter88, not [email protected], gets the iCloud backup.

1/24/19: Your contacts have been restored successfully on January 24, 2019, 1:17 PM PST.

1/25/19: The data you requested on January 20, 2019 at 5:40:26 PM EST is ready to download. [Sent to both Droidhunter88 and [email protected]]

1/27/19: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: January 27, 2019, 7:41 AM PST

Several photo editing apps are purchased in this period (and one CAD app).

1/27/19: You’ve purchased the following subscription with a 1‑month free trial: Subscription Polarr Photo Editor Yearly

2/6/19: The following changes to your Apple ID, [email protected] were made on February 5, 2019 at 11:39:09 PM EST: Phone number(s)

2/9/19: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: February 9, 2019, 9:52 AM PST

2/9/19: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: February 9, 2019, 5:08 PM PST

2/9/19: Hunter connected to your Google Account

Hi Robinson, Hunter now has access to your Google Account [email protected].

2/9/19: test To:[email protected]

2/9/19: jk From: “Robinson Hunter” [email protected] To: [email protected]

2/9/19: The following information for your Apple ID (r•••••@icloud.com) was updated on February 10, 2019. Trusted Phone Number Added – Phone number ending in 96

2/9/19: You recently added [email protected] as the notification email address for your Apple ID.

2/9/19: You recently added [email protected] as the notification email address for your Apple ID

2/9/19: The following changes to your Apple ID, [email protected] were made on February 9, 2019 at 8:33:57 PM EST: Rescue email address

2/9/19: Your Apple ID ([email protected]) was used to sign in to iCloud on an iPhone 6s. Date and Time: February 9, 2019, 6:11 PM PST

2/10/19: Your Apple ID, [email protected], was just used to download Call recorder for iphone from the iTunes Store on a computer or device that has not previously been used.

2/15/19: Hi Robinson, Did you know? Hunter doesn’t have only one Chrome extension! We recently built a simple email tracker for Gmail.

This is where the data on the MacBook that would end up in Mac Isaac’s shop started getting deleted.

2/15/19: Robert’s MacBook is being erased. The erase of Robert’s MacBook started at 4:18 PM PST on February 15, 2019.

2/15/19: Robert’s MacBook Pro has been locked. This Mac was locked at 8:36 PM PST on February 15, 2019.

2/19/19: Noiseless MacPhun LLC

2/20/19: where the fuck are youi? from DroidHunter88 to dpagano:

this is hunter

i dont have your #

call me please

The droidhunter88 account bought a new iPhone but, after telling Apple they would recycle the old one, kept it instead. That would effectively be another device associated with Hunter Biden. Given some of the other apps involved, this may have served as a way to get Hunter Biden’s calls (e.g., from Mac Isaac). Unlike the new devices that show up in 2018, this one was paid for.

2/21/19: New device signed in to [email protected] Your Google Account was just signed in to from a new Apple iPhone device.

2/21/19: Hi Robinson, Welcome to Google on your new Apple iPhone (tied to droidhunter88)

2/28/19: Your items are ready for pickup. Order Number: W776795632 Ordered on: February 28, 2019

2/28/19: Your trade-in has been initiated. Thanks for using Apple GiveBack.

3/1/19: Your Apple ID ([email protected]) was used to sign in to iCloud on an iPhone XR. Date and Time: March 1, 2019, 8:52 AM PST

3/5/19: Recently you reported an issue with Polarr Photo Editor, Polarr Photo Editor Yearly using iTunes Report a Problem

3/7/19: Your Apple ID, [email protected], was just used to download Lovense [sic] Remote from the App Store on a computer or device that has not previously been used.

3/9/19: New sign-in to your linked account [email protected] Your Google Account was just signed in to from a new Apple iPhone device.

3/9/19: Promise Me, Dad: A Year of Hope, Hardship, and Purpose (Unabridged)

3/13/19: Your Apple ID ([email protected]) was used to sign in to iCloud via a web browser. Date and Time: March 13, 2019, 5:43 PM PDT

3/16/19: The following changes to your Apple ID, [email protected] were made on March 16, 2019 at 11:59:16 PM EDT: Email address(es)

Droidhunter88 is added back to Hunter’s iCloud account as a contact email.

3/17/19: You recently added [email protected] as a new alternate email address for your Apple ID.

3/17/19: The following changes to your Apple ID, [email protected] were made on March 17, 2019 at 12:02:06 AM EDT: Email address(es)

3/17/19: We haven’t received your device.